Abstract: Reproducing the diverse and agile motor skills of animals has always been a long-term challenge in robotics. Although manually designed controllers have been able to simulate many complex behaviors, building such a controller involves a time-consuming and difficult development process, and usually requires a lot of expertise in the nuances of each skill. Reinforcement learning provides an attractive option for the manual work involved in the development of automation controllers. However, designing learning goals that can elicit the desired behavior from the agent may also require a lot of specialized skills. In this work, we propose an imitation learning system that enables legged robots to learn agile motor skills by imitating animals in the real world.

Quadruped robots learn motor skills by imitating dogs

Whether it is a dog chasing a dog or a monkey swaying in a tree, animals can easily show a wealth of agile motor skills. However, designing a controller that enables legged robots to replicate these agile behaviors can be a very difficult task. Compared with robots, the superior agility seen in animals may raise questions: Can we create more agile robot controllers with less energy by directly imitating animals?

In this work, we propose a framework for learning robot motor skills by imitating animals. Given a reference motion clip recorded from an animal (such as a dog), our framework will use reinforcement learning to train a control strategy that enables the robot to imitate the motion in the real world. Then, simply by providing the system with different reference motions, we can train the quadruped robot to perform a variety of agile behaviors, from fast gait to dynamic jumping. These strategies are mainly trained in simulation, and then transferred to the real world using latent space adaptation technology, which can effectively adapt the strategy using only a few minutes of data from a real robot.

Framework

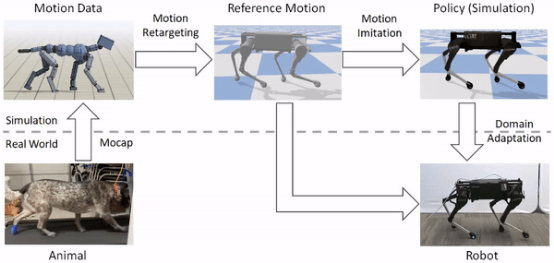

Our framework consists of three main parts: movement reorientation, movement imitation and domain adaptation. 1) First, given a reference motion, the motion reorientation stage will map the motion from the shape of the original animal to the shape of the robot. 2) Next, the motion simulation phase uses the redirected reference motion to train the strategy used to simulate the motion. 3) Finally, the domain adaptation stage transfers the strategy from simulation to the real robot through an effective example domain adaptation process. We apply this framework to learn the various agile motor skills of the Laikago quadruped robot of Yushu Technology.

The framework includes three stages: movement reorientation, movement imitation and domain adaptation. It receives movement data recorded from animals as input and outputs control strategies that enable the robot to reproduce movement in the real world

Movement redirection

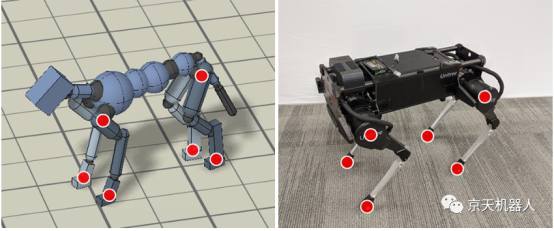

The body of an animal is usually completely different from the body of a robot. Therefore, before the robot can imitate the actions of animals, we must first map the actions to the body of the robot. The goal of the relocation process is to construct a reference motion for the robot to capture important features of animal motion. To this end, we first determine a set of source key points on the animal's body, such as hips and feet. Then, specify the corresponding target key points on the body of the robot.

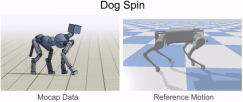

Inverse kinematics (IK) is used to reposition the motion capture clips recorded from the real dog (left) to the robot (right). The corresponding key point pair (red) is specified on the body of the dog and the robot, and then IK is used to calculate the pose for the robot that tracks the key point.

Next, inverse kinematics is used to construct a reference motion for the robot, which tracks the corresponding key points of the animal at each point in time.

Inverse kinematics is used to reposition the motion capture clips recorded by the dog to the robot

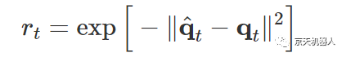

Motion imitation

After reorienting the reference motion to the robot, the next step is to train the control strategy to mimic the reorientated motion. But reinforcement learning algorithms take a long time to learn effective strategies, and training directly on real robots can be very dangerous. Therefore, we instead choose to perform most of the training in a comfortable simulation environment, and then use more effective sample adaptation techniques to transfer the learned strategies to the real world. All simulations are performed using PyBullet.

策略

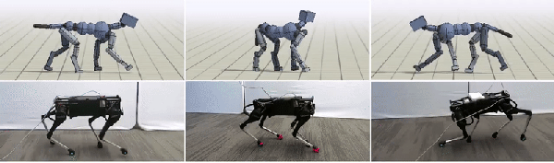

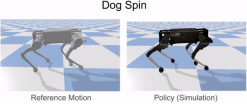

By simply using different reference actions in the reward function, we can train a simulated robot to imitate a variety of different skills.

Reinforcement learning is used to train simulated robots to imitate the reorientated reference motion

Field adaptation

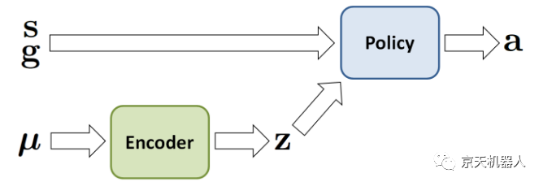

Since simulators usually only provide a rough approximation of the real world, strategies trained in simulation usually do not work well when deployed on actual robots. Therefore, in order to transfer the simulated training strategy to the real world, we use the example effective domain adaptation technology, which only needs a few experiments on real robots to adapt the strategy to the real world. To this end, we first apply field randomization in simulation training to randomly change dynamic parameters, such as mass and friction. Then, the kinetic parameters are also collected into the vector μ, and encoded as a latent representation z by the encoder E(z|μ). The latent code is passed as an additional input to the strategy π(a|s, g, z).

The simulated kinetic parameters will change during training and will also be encoded as a latent representation, which will be provided as an additional input to the strategy

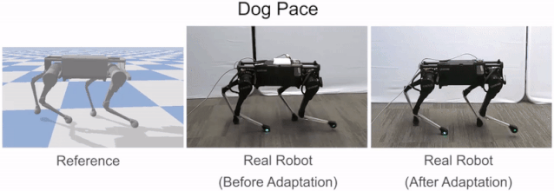

When transferring the strategy to a real robot, we will delete the encoder, and then directly search ž Maximize the return of the robot in the real world. This is done using dominance weighted regression (a simple non-policy reinforcement learning algorithm). In our experiments, the technology is usually able to adapt the strategy to the real world through less than 50 trials, which is equivalent to about 8 minutes of real data.

Before adaptation, the robot is prone to fall. After adaptation, these strategies can better perform the required skills

Result

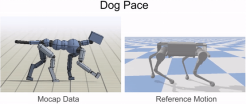

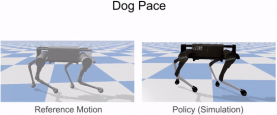

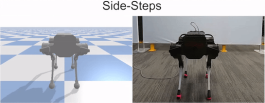

Our framework is able to train the robot to imitate the various motor skills of the dog, including different walking gaits (such as pacing and trotting) and fast rotating movements. We can also train the robot to walk backwards by simply playing the backward walking motion forward.

Click the link to watch the video:https://mp.weixin.qq.com/s/BvwEFXWNylGFPraHJdxSmQ

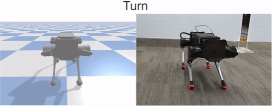

In addition to imitating the movements of real dogs, we can also imitate the key frame movements of the artist's animation, including dynamic turns:

Skills learned to mimic the movement of animation keyframes

We also compared the learned strategy with the manually designed controller provided by the manufacturer. Our policy can learn faster gait.

Comparison of the learned trotting attitude with the built-in gait provided by the manufacturer

Click the link to watch the above animation:https://mp.weixin.qq.com/s/BvwEFXWNylGFPraHJdxSmQ

In general, our system has been able to reproduce various behaviors with quadruped robots. However, due to hardware and algorithm limitations, we cannot imitate more dynamic movements, such as running and jumping. The strategies learned are not as powerful as the best manually designed controllers. Exploring techniques to further improve the agility and robustness of these learning strategies may be an important step towards more complex practical applications. Extending the framework to learn skills from videos will also be an exciting direction, which can greatly increase the amount of data that robots can learn from.

References: Peng X B, Coumans E, Zhang T, et al. Learning Agile Robotic Locomotion Skills by Imitating Animals[J]. arXiv preprint arXiv:2004.00784, 2020.

Source address: https://github.com/google-research/motion_imitation

Unitree Technology laikago: http://www.unitree.cc/cn/e/action/ShowInfo.php?classid=6&id=1

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center,Wuhan City,Hubei Province,China

Tel:027-87522899,027-87522877

Robot System Integration

Artificial Intelligence Robots

Mobile Robot

Collaborative Robotic Arm

ROS modular robot

Servo and sensor accessories

Scientific Research

Professional Co Construction

Training Center

Academic Conference

Experimental instruction

Jingtian Cup Event