Army researchers have developed a reinforcement learning method that will enable swarms of unmanned aerial and ground vehicles to carry out various missions optimally while minimizing performance uncertainty.

Swarming is a method of operation in which multiple autonomous systems act as a cohesive unit by actively coordinating their actions.

Army researchers say that future multi-domain battles will require swarms of dynamically coupled, coordinated heterogeneous mobile platforms to overmatch enemy capabilities and threats targeting U.S. forces.

Dr. Jemin George of the U.S. Army Combat Capabilities Development Command's Army Research Laboratory said the Army is looking to swarming technology to perform time-consuming or dangerous tasks.

George said: "Finding optimal guidance policies for these swarming vehicles in real time is a key requirement for enhancing warfighters' tactical situational awareness and allowing the U.S. Army to dominate in a contested environment."

Reinforcement learning provides a way to optimally control uncertain agents to achieve multi-objective goals when a precise model of the agent is unavailable. However, existing reinforcement learning schemes can only be applied in a centralized manner, which requires pooling the state information of the entire swarm at a central learner. George said that this drastically increases computational complexity and communication requirements, resulting in unreasonable learning time.

To address this problem, George teamed with Professor Aranya Chakrabortty of North Carolina State University and Professor He Bai of Oklahoma State University to tackle the large-scale multi-agent reinforcement learning problem. The Army funded the work through the Director's Research Award for External Collaborative Initiative (ECI), a laboratory program designed to stimulate and support new and innovative research in collaboration with external partners.

The main goal of this work is to develop a theoretical basis for data-driven optimal control of large-scale swarm networks. In this model, control actions will be based on low-dimensional measurement data rather than dynamic models.

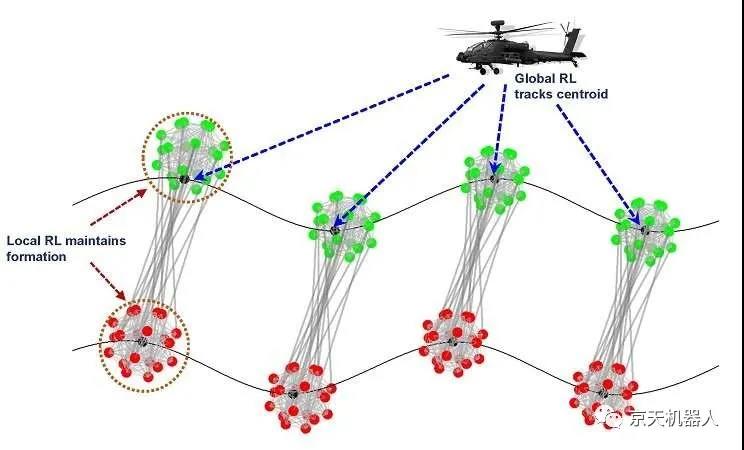

The current method is called "Hierarchical Reinforcement Learning" (HRL). It decomposes the global control objective into multiple hierarchies, namely multiple small-group-level microscopic controls and a broad swarm-level macroscopic control.

George said: "Each hierarchy has its own learning loop with respective local and global reward functions. By running these learning loops in parallel, we were able to significantly reduce the learning time."

Army researchers envision hierarchical control for coordinating ground and air vehicles.

According to George, online reinforcement learning control of a swarm boils down to solving a large-scale algebraic matrix Riccati equation using system (swarm) input-output data.
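
To make that reduction concrete, here is a minimal sketch for a single scalar agent. It is not the researchers' algorithm. It assumes a hypothetical agent with dynamics dx/dt = a*x + b*u and cost equal to the integral of q*x^2 + r*u^2, for which the algebraic Riccati equation is 2*a*p - (b^2/r)*p^2 + q = 0. The loop is a Kleinman-style policy iteration that uses the model parameters a and b directly; the data-driven schemes described in the article would estimate the same quantities from input-output measurements instead. All names and numbers are illustrative assumptions.

```python
import math


def riccati_closed_form(a: float, b: float, q: float, r: float) -> float:
    """Stabilizing root of the scalar ARE 2*a*p - (b**2 / r) * p**2 + q = 0."""
    return r * (a + math.sqrt(a * a + b * b * q / r)) / (b * b)


def policy_iteration(a, b, q, r, k0, iterations=10):
    """Kleinman-style policy iteration for the scalar LQR problem.

    k0 must be stabilizing, i.e. a - b * k0 < 0.
    Returns the final cost coefficient p and feedback gain k (u = -k * x).
    """
    k = k0
    p = None
    for _ in range(iterations):
        closed_loop = a - b * k
        if closed_loop >= 0:
            raise ValueError("gain is not stabilizing")
        # Policy evaluation: solve 2*(a - b*k)*p + q + r*k**2 = 0 for p.
        p = (q + r * k * k) / (-2.0 * closed_loop)
        # Policy improvement: the gain that is greedy with respect to p.
        k = b * p / r
    return p, k


# Hypothetical unstable agent (a > 0) with unit weights (assumed values).
p_iter, k_iter = policy_iteration(a=1.0, b=1.0, q=1.0, r=1.0, k0=2.0)
p_exact = riccati_closed_form(a=1.0, b=1.0, q=1.0, r=1.0)
print(abs(p_iter - p_exact) < 1e-9)
```

Each pass alternates between evaluating the current feedback gain and improving it, which is the same evaluate-then-improve pattern that reinforcement learning applies when the model is unknown. For a swarm, p becomes a large symmetric matrix, which is what makes the centralized problem expensive.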

The researchers' initial method for solving this large-scale matrix Riccati equation was to divide the swarm into multiple smaller groups and perform group-level local reinforcement learning in parallel, while performing global reinforcement learning on a lower-dimensional compressed state from each group.
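
A rough way to see why this decomposition helps is to count unknowns. The solution of a matrix Riccati equation is a symmetric matrix, so a problem with n states has n(n+1)/2 independent entries to learn. The sketch below compares one centralized problem with several group-level problems plus one global problem on the compressed state. The swarm size, the group sizes, and the choice of one compressed state per group are illustrative assumptions, not figures from the research, and the count ignores coupling between groups.

```python
def symmetric_unknowns(n: int) -> int:
    """Number of independent entries in an n x n symmetric matrix."""
    return n * (n + 1) // 2


def centralized_unknowns(total_states: int) -> int:
    """Unknowns when one learner handles the state of the whole swarm."""
    return symmetric_unknowns(total_states)


def hierarchical_unknowns(group_sizes, compressed_dim_per_group=1):
    """Unknowns for group-level learners plus one global learner.

    group_sizes: number of states in each group (assumed, hypothetical).
    compressed_dim_per_group: size of the compressed state each group
        reports to the global learner (assumed, hypothetical).
    """
    local = [symmetric_unknowns(m) for m in group_sizes]
    global_states = compressed_dim_per_group * len(group_sizes)
    return {
        "local_total": sum(local),        # work summed over all groups
        "largest_local": max(local),      # bottleneck if groups run in parallel
        "global": symmetric_unknowns(global_states),
    }


# Hypothetical swarm: 100 states split into 10 groups of 10 states each.
central = centralized_unknowns(100)
hier = hierarchical_unknowns([10] * 10)
print(central, hier)
```

Because the group-level problems can run in parallel, the largest single group and the global problem bound the work on any one learner, rather than the full swarm dimension.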

Their current HRL scheme uses a decoupling mechanism that lets the team approximate the solution of the large-scale matrix equation hierarchically: it first solves the local reinforcement learning problems and then synthesizes the global control from the local controllers by solving a least-squares problem, instead of running global reinforcement learning on the aggregated state. This further reduces the learning time.

Experiments show that, compared with a centralized approach, HRL reduced learning time by 80% while limiting the optimality loss to 5%.

George said: "Our current HRL efforts will allow us to develop control policies for swarms of unmanned aerial and ground vehicles so that they can optimally accomplish different mission sets, even though the individual dynamics of the swarming agents are unknown."

George said he is confident that this research will have an impact on the future battlefield, and that it was made possible by innovative collaboration.

George said: "The core purpose of the ARL science and technology community is to create and exploit scientific knowledge for transformational overmatch. By engaging external research through ECI and other cooperative mechanisms, we hope to conduct disruptive foundational research that will lead to Army modernization while serving as the Army's primary collaborative link to the worldwide scientific community."

The team is currently working to further improve its HRL control scheme by considering the optimal grouping of agents in the swarm, so as to minimize computation and communication complexity while limiting the optimality gap.

They are also investigating the use of deep recurrent neural networks to learn and predict the best grouping patterns, as well as the application of the developed techniques to the optimal coordination of autonomous air and ground vehicles in multi-domain operations in dense urban terrain.

George and the ECI partners recently organized and chaired an invited virtual session on multi-agent reinforcement learning at the 2020 American Control Conference, where they presented their research findings.

Original paper: Bai H, George J, Chakrabortty A. Hierarchical control of multi-agent systems using online reinforcement learning. In: 2020 American Control Conference (ACC). IEEE, 2020: 340-345.
