Introduction: Can imitation-learning robots quickly take over some repetitive manual tasks from humans in horticulture?

This project explores a new human-robot interaction system based on soft robotics and its application to the semi-automatic propagation of a variety of ornamental plants. It studies how non-expert users (that is, users without technical expertise) can program and control the robot so that it becomes proficient at nursery processing tasks. Such robots can be used in the workplace to relieve workers of repetitive, labor-intensive tasks.

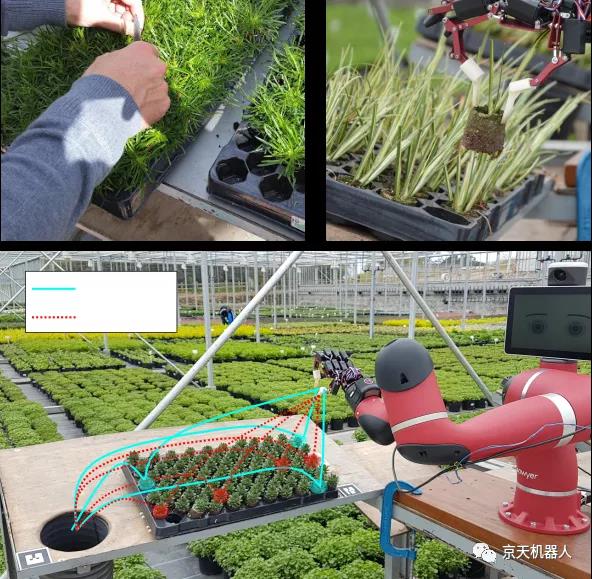

The figure above shows a Sawyer collaborative robotic arm from Rethink Robotics being used for a horticultural sorting task. Here, unhealthy plants must be removed from the plant tray. The key is to generalize from the demonstration trajectory (cyan) provided by the user to a suitable trajectory for picking a plant from a previously unseen location (red).
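As a rough illustration of what "generalizing" a demonstration can mean, the sketch below shifts a demonstrated 2-D path so that it ends at a new pick location. It is a deliberately simple stand-in and not the method used in the project; the function name `generalise_trajectory`, the linear blending scheme, and the sample coordinates are all assumptions made for this example.

```python
import numpy as np

def generalise_trajectory(demo, demo_target, new_target):
    """Re-target a demonstrated path so that it ends at a new pick location.

    demo:        (N, 2) array of demonstrated x, y waypoints.
    demo_target: (2,) plant location used in the demonstration.
    new_target:  (2,) plant location in the new, unseen situation.
    """
    demo = np.asarray(demo, dtype=float)
    offset = np.asarray(new_target, dtype=float) - np.asarray(demo_target, dtype=float)
    # Blend the offset in gradually: 0 at the start (the starting pose is kept),
    # 1 at the end (the gripper arrives at the new plant location).
    blend = np.linspace(0.0, 1.0, len(demo))[:, None]
    return demo + blend * offset

# Hypothetical demonstration ending at the plant it was recorded for.
demo = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.3], [0.3, 0.3]])
new_path = generalise_trajectory(demo, demo_target=demo[-1], new_target=[0.5, 0.1])
print(new_path)
```

The adapted path keeps the shape of the demonstration near the start and bends toward the new target near the end. A learned model would normally draw on several demonstrations rather than warping a single one.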

Recruiting and retaining labor is more difficult than ever for horticultural businesses. Greater use of robotics and automation has long been regarded as one potential way to meet this challenge, but that is only possible if we can overcome some of the technical challenges unique to the industry.

Certain forms of automation, such as tray fillers and planters, are already familiar, and driverless tractors are no longer just a dream. When it comes to handling the crops themselves, however, progress in robotic manipulation has been much slower.

Robots work best in highly deterministic environments, where they know exactly where to find a machine part or how hard they can press on a surface, but things are rarely that clear-cut with plants.

A new generation of so-called "collaborative" robots may offer solutions for this kind of horticultural operation. Collaborative robots are designed to be inherently safe so that they can work alongside people, and they are intended to let people with no programming background or specialist robotics knowledge set them up quickly and easily for simple tasks and later reconfigure them for other work.

Click the link to view the video: https://mp.weixin.qq.com/s/8wI7Tgbk5JsmEdw-9VlW4w

With "imitation learning", growers perform the task they want automated while the robot observes them through its vision system and through sensors embedded in the "smart gloves" the growers wear.

The process is divided into three stages. It starts with collecting sensor data while the user performs the task: this includes data describing movement, interaction forces, visual recordings, the position of the object the user is working on, and so on. This gives the robot a lot of data, but at this stage it is really just a string of 1s and 0s.
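To make the collection stage more concrete, here is a minimal sketch of how one demonstration might be stored as a sequence of time-stamped samples. The class names (`DemoSample`, `Demonstration`), the field names, and the values are hypothetical and chosen only to mirror the kinds of data listed above; the real system's data format is not described in this article.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class DemoSample:
    """One time-stamped reading captured while the user performs the task."""
    t: float                                      # seconds since recording started
    hand_position: Tuple[float, float, float]     # e.g. from the glove or vision system
    contact_force: float                          # interaction force in newtons
    object_position: Tuple[float, float, float]   # where the pot or plant is

@dataclass
class Demonstration:
    """All samples recorded for a single run of a task."""
    task_name: str
    samples: List[DemoSample] = field(default_factory=list)

    def record(self, sample: DemoSample) -> None:
        self.samples.append(sample)

    def duration(self) -> float:
        """Elapsed time between the first and last sample (0.0 if empty)."""
        if not self.samples:
            return 0.0
        return self.samples[-1].t - self.samples[0].t

# Hypothetical recording of three samples taken 0.1 s apart.
demo_record = Demonstration(task_name="remove unhealthy plant")
for i, t in enumerate([0.0, 0.1, 0.2]):
    demo_record.record(DemoSample(t, (0.1 * i, 0.0, 0.3), 0.5, (0.4, 0.0, 0.0)))
print(len(demo_record.samples), demo_record.duration())
```

Keeping every reading tied to a time stamp and to the object's position is what later allows a learning algorithm to relate the user's motion to the situation in which it was performed.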

To obtain useful information about the task, there is next an "inference phase" in which the robot's machine learning algorithm extracts task information from the raw data. This is how the robot comes to "understand" the user's intention from the demonstrations, so that when it encounters a particular situation it knows what to do. For example, when a pot is placed in front of it, it should pick the pot up and transfer it to the tray.

Finally, once the appropriate action for a specific situation has been determined, the system must translate it into motion. The system must move quickly, safely, and accurately to carry out the learned operation effectively. Modern controllers for collaborative robots can make rigid systems behave in a compliant or "soft" manner, greatly reducing the risks of accidental collisions with the surrounding environment. However, the overall performance of the system still depends on the quality of the data provided by the person teaching it.
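One common way to picture "compliant" behavior is a virtual spring and damper between the robot and its target: the lower the stiffness, the less force the arm applies when something blocks it. The one-dimensional sketch below illustrates that idea only; the function `compliant_force` and the gain values are assumptions for this example and do not describe the controller used on the actual robot.

```python
def compliant_force(x_desired, x_actual, v_actual, stiffness, damping):
    """Spring-damper law: force = K * (x_desired - x_actual) - D * v_actual."""
    return stiffness * (x_desired - x_actual) - damping * v_actual

# Hypothetical case: the arm is blocked 2 cm short of its target and is not moving.
stiff_push = compliant_force(0.10, 0.08, 0.0, stiffness=5000.0, damping=50.0)
soft_push = compliant_force(0.10, 0.08, 0.0, stiffness=200.0, damping=20.0)
print(f"stiff: {stiff_push:.1f} N, soft: {soft_push:.1f} N")
```

With these made-up gains the stiff setting pushes with roughly 100 N against the obstacle while the soft setting pushes with roughly 4 N, which is why a compliant controller lowers the risk in an accidental collision.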

Although imitation learning is a promising mechanism for transferring human skills to robots, an important research question is whether people without robotics or programming expertise, such as growers, can give robots successful demonstrations.

When you perform the task during the collection phase, you are "teaching" the robot, showing it how to complete the task not in just one situation but in many. For example, if you want to demonstrate how to pick up a pot, you show the robot how to pick it up from various positions so that it can cope when the pot is not in exactly the same place every time.
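A simple way to reason about whether a set of demonstrations covers enough positions is to ask how far a new pot location is from the nearest demonstrated one. The sketch below is a hypothetical check of that kind; the function names, the 10 cm radius, and the coordinates are assumptions for illustration and are not taken from the research described here.

```python
import math

def nearest_demo_distance(query, demo_positions):
    """Distance from a new pot position to the closest demonstrated position."""
    return min(math.dist(query, p) for p in demo_positions)

def is_covered(query, demo_positions, radius=0.10):
    """True if some demonstration started within `radius` metres of the query."""
    return nearest_demo_distance(query, demo_positions) <= radius

# Hypothetical pot positions (metres) used in four demonstrations.
demo_positions = [(0.0, 0.0), (0.2, 0.0), (0.0, 0.2), (0.2, 0.2)]

near_corner = is_covered((0.05, 0.0), demo_positions)   # 5 cm from a demonstration
tray_centre = is_covered((0.1, 0.1), demo_positions)    # about 14 cm from all four
print(near_corner, tray_centre)
```

In this example the centre of the tray falls outside the chosen radius, which suggests one more demonstration there might help. A check like this could be one way of prompting a teacher to spread demonstrations more evenly.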

If these learning systems are to be used directly by growers, we need to be able to guide people toward good teaching habits. Initial research was carried out with volunteers from J&A Growers, with the aim of better understanding how people who are not robotics experts interact with robotic systems. At the same time, we have been using commercially available industrial collaborative robots to develop our prototype system and have conducted trials in commercial nurseries.

Click the link to view the video: https://mp.weixin.qq.com/s/8wI7Tgbk5JsmEdw-9VlW4w

The video demonstrates task-parameterised Gaussian mixture regression being used to encode a generalized tray-cleaning task on a Rethink Robotics Sawyer robot fitted with an Active8 AR10 dexterous hand with 10 degrees of freedom.
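For readers unfamiliar with Gaussian mixture regression (GMR), the sketch below shows its core step for a one-dimensional input (time `t`) and a one-dimensional output (position `x`): each Gaussian component proposes a conditional estimate of `x`, and the estimates are blended according to how well each component explains `t`. This is plain GMR with hand-picked mixture parameters. It omits the task-parameterised part, in which models expressed in several reference frames are combined, and it is not the PbDlib implementation; the function name `gmr` and all numbers are assumptions for this example.

```python
import numpy as np

def gmr(t, priors, means, covs):
    """Gaussian mixture regression: expected output x for each input t.

    priors: (K,) mixing weights.
    means:  (K, 2) component means, each row [mean_t, mean_x].
    covs:   (K, 2, 2) joint covariances over [t, x].
    """
    t = np.atleast_1d(np.asarray(t, dtype=float))
    priors, means, covs = (np.asarray(a, dtype=float) for a in (priors, means, covs))
    mu_t, mu_x = means[:, 0], means[:, 1]
    var_t, cov_xt = covs[:, 0, 0], covs[:, 1, 0]
    # Responsibility of each component for each input, shape (len(t), K).
    diff = t[:, None] - mu_t[None, :]
    lik = priors * np.exp(-0.5 * diff**2 / var_t) / np.sqrt(2 * np.pi * var_t)
    h = lik / lik.sum(axis=1, keepdims=True)
    # Conditional mean of x given t for each component, blended by responsibility.
    cond_mean = mu_x + cov_xt / var_t * diff
    return (h * cond_mean).sum(axis=1)

# Hypothetical two-component model: x is near 0 early on and near 1 later.
priors = [0.5, 0.5]
means = [[0.25, 0.0], [0.75, 1.0]]
covs = [[[0.01, 0.0], [0.0, 0.01]], [[0.01, 0.0], [0.0, 0.01]]]
x_hat = gmr([0.25, 0.5, 0.75], priors, means, covs)
print(x_hat)
```

The regression returns a value close to 0 near the first component, close to 1 near the second, and a smooth blend in between, which is how a handful of Gaussians can reproduce a continuous motion. In practice the mixture parameters would be fitted to demonstration data rather than set by hand.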

References:

1. Sena A, Zhao Y, Howard M J. Teaching Human Teachers to Teach Robot Learners[C]//ICRA. 2018: 1-7.

2. Sena A, Michael B, Howard M. Improving Task-Parameterised Movement Learning Generalisation with Frame-Weighted Trajectory Generation[J]. arXiv preprint arXiv:1903.01240, 2019.

PbDlib open source framework:

PbDlib is a collection of source code for robot programming by demonstration (learning from demonstration). It includes a range of functions at the intersection of statistical learning, dynamical systems, differential geometry, and optimal control.

https://www.idiap.ch/software/pbdlib/

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center, Wuhan City, Hubei Province, China

Tel: 027-87522899, 027-87522877
