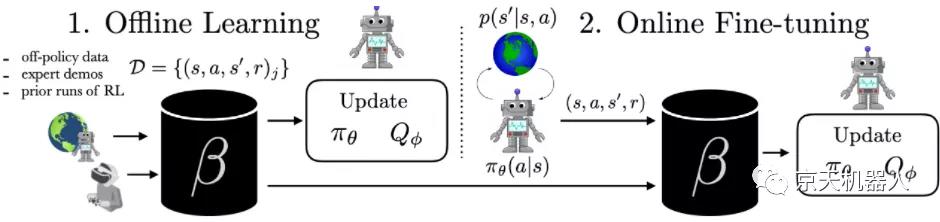

This method learns complex behaviors by training offline on prior datasets (expert demonstrations, data from previous experiments, or random exploration data) and then fine-tuning quickly through online interaction.

Robots trained with reinforcement learning (RL) have the potential to be used for a wide variety of challenging real-world problems. To apply RL to a new problem, one usually sets up the environment, defines a reward function, and trains the robot to solve the task by letting it explore the new environment from scratch. Although this may eventually work, such "online" RL methods are data hungry. Repeating this data-inefficient process for every new problem makes online RL difficult to apply to real-world robotics. What if, instead of repeating the data collection and learning process from scratch each time, we could reuse data across multiple problems or experiments? Doing so would greatly reduce the data collection burden for each new problem we encounter.

The first step toward a data-driven paradigm for RL is to consider the general idea of offline (batch) RL. Offline RL considers the problem of learning an optimal policy from arbitrary off-policy data, without any further exploration. This removes the data collection problem from RL and makes it possible to incorporate data from other sources, including other robots or teleoperation. However, depending on the quality of the available data and the problem being tackled, we often need to augment offline training with targeted online improvement. This problem setting has unique challenges of its own. In this blog post, we discuss how to move RL away from training from scratch on every new problem and toward a paradigm that reuses prior data effectively, with some offline training followed by online fine-tuning.

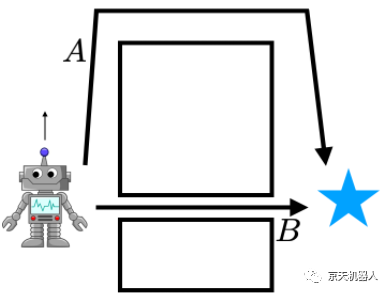

Figure 1: The problem of accelerating online RL with offline datasets. In (1), the robot learns a policy entirely from an offline dataset. In (2), the robot interacts with the world and collects on-policy samples to improve the policy beyond what it could learn offline.

We analyze the challenges of learning from offline data and then fine-tuning, using the standard HalfCheetah locomotion benchmark. The following experiments use a prior dataset consisting of 15 demonstrations from an expert policy and 100 suboptimal trajectories sampled from a behavioral clone of these demonstrations.

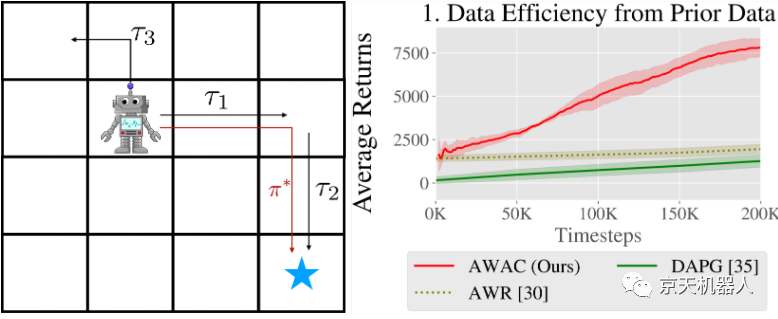

Figure 2: On-policy methods learn more slowly than off-policy methods, because off-policy methods can "stitch" good trajectories together, as illustrated on the left. Right: in practice, we observe slow online improvement with on-policy methods.

1. Data efficiency

A simple way to use prior data such as demonstrations for RL is to pre-train a policy with imitation learning and then fine-tune it with an on-policy RL algorithm such as AWR or DAPG. This has two drawbacks. First, the prior data may not be optimal, so imitation learning may be ineffective. Second, on-policy fine-tuning is data inefficient, because it does not reuse the prior data in the RL stage. For real-world robotics, data efficiency is crucial. Consider the robot in the left panel of Figure 2, which is trying to reach the goal state using prior trajectories τ1 and τ2. On-policy methods cannot make effective use of this data. Off-policy algorithms that perform dynamic programming can, because a value function or a model lets them "stitch" τ1 and τ2 together. This effect can be seen in the learning curves in Figure 2, where on-policy methods are an order of magnitude slower than off-policy actor-critic methods.
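The "stitching" argument can be made concrete with a toy tabular sketch. It assumes a hypothetical five-state graph rather than the robot task in the figure. One prior trajectory goes from the start S to a shared state M and then to a dead end X. The other goes from Y through M to the goal G, which is the only rewarded transition. Neither trajectory alone leads from S to G. A Bellman backup over the fixed dataset, restricted to actions seen in the data, still propagates the goal reward back to S through M.

```python
from collections import defaultdict

# Hypothetical offline transitions: (state, action, reward, next_state, done).
# tau1 goes S -> M -> X (dead end); tau2 goes Y -> M -> G (goal, reward 1).
tau1 = [("S", "a", 0.0, "M", False), ("M", "b", 0.0, "X", True)]
tau2 = [("Y", "c", 0.0, "M", False), ("M", "d", 1.0, "G", True)]
dataset = tau1 + tau2


def fitted_q_iteration(transitions, gamma=0.9, iters=50):
    """Tabular Q-iteration over a fixed dataset; the max ranges only over actions seen in the data."""
    actions = defaultdict(set)
    for s, a, _, _, _ in transitions:
        actions[s].add(a)
    q = defaultdict(float)
    for _ in range(iters):
        new_q = defaultdict(float)
        for s, a, r, s_next, done in transitions:
            next_actions = actions.get(s_next, set())
            if done or not next_actions:
                next_v = 0.0
            else:
                next_v = max(q[(s_next, b)] for b in next_actions)
            new_q[(s, a)] = r + gamma * next_v
        q = new_q
    return q, actions


q, actions = fitted_q_iteration(dataset)
greedy = {s: max(acts, key=lambda a: q[(s, a)]) for s, acts in actions.items()}
print(q[("S", "a")])  # 0.9: the goal reward reaches S through the shared state M
print(greedy["M"])    # d: at M the greedy policy switches from tau1 onto tau2
```

Imitating either trajectory alone would never take S to G. Restricting the max to actions present in the data keeps this example free of the extrapolation issue discussed in the next section.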

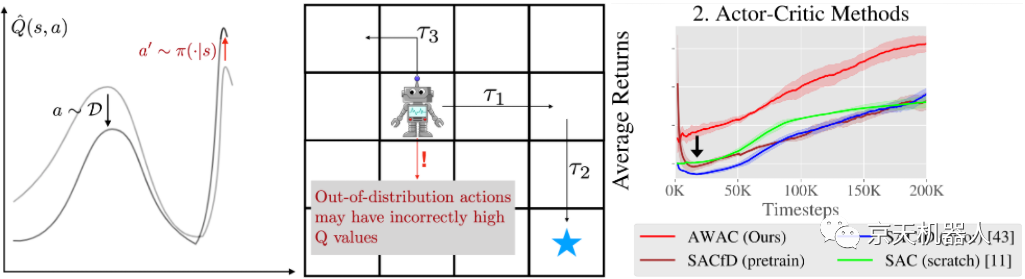

Figure 3: Bootstrapping error is a problem when off-policy RL is used for offline training. Left: the policy exploits an erroneous Q value far from the data, which leads to a poor update of the Q function. Middle: as a result, the robot may take out-of-distribution actions. Right: bootstrapping error leads to poor offline pre-training when using SAC and its variants.

2. Bootstrapping error

In principle, actor-critic methods can learn efficiently from off-policy data. They do this by estimating the value V(s) or the action value Q(s, a) of future returns through Bellman bootstrapping. However, when standard off-policy actor-critic methods (we use SAC) are applied to our problem, they perform poorly, as shown in Figure 3. Although the prior dataset is in the replay buffer, these algorithms do not benefit significantly from offline training, as a comparison of the SAC (scratch) and SACfD (prior) curves in Figure 3 shows. Moreover, even when the policy is pre-trained with behavioral cloning ("SACfD (pretrain)"), we still observe an initial drop in performance.

This challenge can be attributed to the accumulation of off-policy bootstrapping error. During training, the Q estimates are not fully accurate, especially when they extrapolate to actions that are not present in the data. The policy update exploits overestimated Q values, which makes the Q estimates even worse. The figure illustrates the problem: incorrect Q values lead to incorrect updates of the target Q values, which may cause the robot to take poor actions.
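A small numeric sketch shows how a single bootstrapped target can be corrupted. All Q values below are hypothetical. At the next state, only actions 0 and 1 appear in the data, so their estimates are close to the truth. Actions 2 to 4 were never observed, so their estimates are arbitrary extrapolation. The usual off-policy target takes a max over all actions, so it picks up the spurious value.

```python
import numpy as np

gamma = 0.99
reward = 0.0

# Hypothetical Q values at the next state s' for 5 discrete actions.
q_true_next = np.array([1.0, 0.8, 0.0, 0.0, 0.0])
q_hat_next = np.array([1.02, 0.79, 3.5, -0.4, 0.6])  # actions 2-4: extrapolation error
in_data = np.array([True, True, False, False, False])  # actions seen in the buffer at s'

# Standard off-policy target: max over all actions, including unseen ones.
target_unconstrained = reward + gamma * q_hat_next.max()
# Same target, but only over actions supported by the data.
target_in_support = reward + gamma * q_hat_next[in_data].max()
target_true = reward + gamma * q_true_next.max()

print(int(q_hat_next.argmax()))  # 2: an action never seen in the data
print(target_unconstrained, target_in_support, target_true)
```

The overestimated target becomes the regression target for Q(s, a). The next backup then reads that inflated value, which is how the error accumulates over many updates.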

3. Non-stationary behavior model

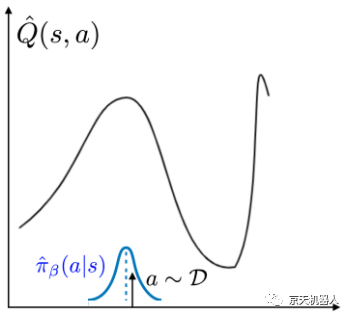

Existing offline RL algorithms such as BCQ, BEAR, and BRAC address the bootstrapping problem by preventing the policy from straying too far from the data. The key idea is to prevent bootstrapping error by constraining the policy π to stay close to the "behavior policy" πβ, that is, to the actions present in the replay buffer. The figure above illustrates this idea: by sampling actions from πβ, we avoid exploiting incorrect Q values far from the data distribution.
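The effect of sampling actions from πβ can be sketched in one dimension. This is a simplification in the spirit of BCQ, not BCQ itself, which also learns a generative model and a perturbation network. It assumes a hypothetical learned Q with a spurious peak far from the data. Maximizing Q over the whole action range finds the spurious peak. Choosing the best of a few actions sampled from a Gaussian estimate of πβ stays near the data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical replay-buffer actions, clustered around 0.
buffer_actions = rng.normal(loc=0.0, scale=0.2, size=1000)
mu_b, sigma_b = buffer_actions.mean(), buffer_actions.std()


def q_hat(a):
    """Hypothetical learned Q: sensible near the data, plus a spurious peak near a = 2.5."""
    return -(a - 0.1) ** 2 + 10.0 * np.exp(-((a - 2.5) ** 2) / 0.05)


# Unconstrained improvement: maximize Q over the full action range.
grid = np.linspace(-3.0, 3.0, 601)
a_unconstrained = grid[np.argmax(q_hat(grid))]

# Constrained improvement: only consider candidates sampled from the behavior model.
candidates = rng.normal(mu_b, sigma_b, size=10)
a_constrained = candidates[np.argmax(q_hat(candidates))]

print(a_unconstrained, a_constrained)
```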

However, πβ is usually unknown, especially for offline data, and must be estimated from the data itself. Many offline RL algorithms (BEAR, BCQ, ABM) explicitly fit a parametric model to samples from the πβ distribution in the replay buffer. Once this estimate is formed, existing methods enforce the policy constraint in various ways. These include penalties on the policy update (BEAR, BRAC) and architectural choices for sampling actions during policy training (BCQ, ABM).
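The penalty-based variant can be sketched minimally as follows. The sketch makes three assumptions: a state-independent diagonal Gaussian behavior model fitted by maximum likelihood, a Gaussian policy, and a KL penalty. BRAC considers KL among several divergences, while BEAR uses a different one (MMD). The function names are hypothetical and not taken from any of these codebases.

```python
import numpy as np


def fit_gaussian_behavior(actions):
    """Maximum-likelihood diagonal Gaussian fit to buffer actions (state-independent for brevity)."""
    mu = actions.mean(axis=0)
    sigma = actions.std(axis=0) + 1e-6  # small floor avoids zero variance
    return mu, sigma


def kl_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    """KL(p || q) between diagonal Gaussians, summed over action dimensions."""
    return np.sum(
        np.log(sigma_q / sigma_p)
        + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2.0 * sigma_q ** 2)
        - 0.5
    )


def penalized_actor_objective(q_value, mu_pi, sigma_pi, mu_b, sigma_b, alpha=1.0):
    """What the actor maximizes: the Q estimate minus a divergence penalty toward pi_beta."""
    return q_value - alpha * kl_gaussian(mu_pi, sigma_pi, mu_b, sigma_b)


rng = np.random.default_rng(0)
buffer_actions = rng.normal(loc=[0.0, 0.5], scale=[0.1, 0.2], size=(5000, 2))
mu_b, sigma_b = fit_gaussian_behavior(buffer_actions)

# A policy matching the behavior model pays no penalty; a distant one pays a large penalty.
near = penalized_actor_objective(1.0, mu_b, sigma_b, mu_b, sigma_b)
far = penalized_actor_objective(1.0, np.array([2.0, -1.0]), sigma_b, mu_b, sigma_b)
print(near, far)
```

Everything here hinges on how well (mu_b, sigma_b) represent the buffer. That becomes the weak point once the buffer keeps changing during online fine-tuning.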

Although constrained offline RL algorithms perform well offline, they are difficult to improve through fine-tuning, as shown in the third plot of Figure 1. Purely offline RL performance ("0K" in Figure 1) is much better than that of SAC. However, with additional iterations of online fine-tuning, performance improves very slowly, as the slope of the BEAR curve in Figure 1 shows. What causes this?

The problem is that an accurate behavior model must be maintained while data is collected online during fine-tuning. In the offline setting, the behavior model only needs to be trained once. In the online setting, it must be updated continually to track the incoming data. Training density models online (in the "streaming" setting) is a challenging research problem. Mixing online and offline data makes it harder still, because the mixture can induce a complex, multi-modal behavior distribution. To solve our problem, we need an off-policy RL algorithm that constrains the policy enough to prevent offline instability and error accumulation. At the same time, it must not be so conservative that imperfect behavior modeling blocks online fine-tuning. Our proposed algorithm, advantage weighted actor critic (AWAC), achieves this by using an implicit constraint.
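A deliberately simple sketch shows why a refitted behavior model can mislead. It assumes hypothetical one-dimensional actions: offline data concentrated at -1, and an improving online policy whose actions concentrate at +1. Refitting a single Gaussian to the mixed buffer puts the highest density at 0, an action that never occurs. A constraint built on that model would pull the policy toward 0 and away from the actions that are actually improving.

```python
import numpy as np

# Hypothetical 1-D actions: offline data near -1, new online data near +1.
offline_actions = np.full(500, -1.0)
online_actions = np.full(500, 1.0)
buffer_actions = np.concatenate([offline_actions, online_actions])

# Refit a unimodal Gaussian behavior model to the mixed buffer.
mu, sigma = buffer_actions.mean(), buffer_actions.std()


def gaussian_pdf(x, mu, sigma):
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


print(mu, sigma)                      # 0.0 1.0
print(gaussian_pdf(0.0, mu, sigma))   # density at an action that never occurs
print(gaussian_pdf(1.0, mu, sigma))   # lower density at the actions taken online
```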

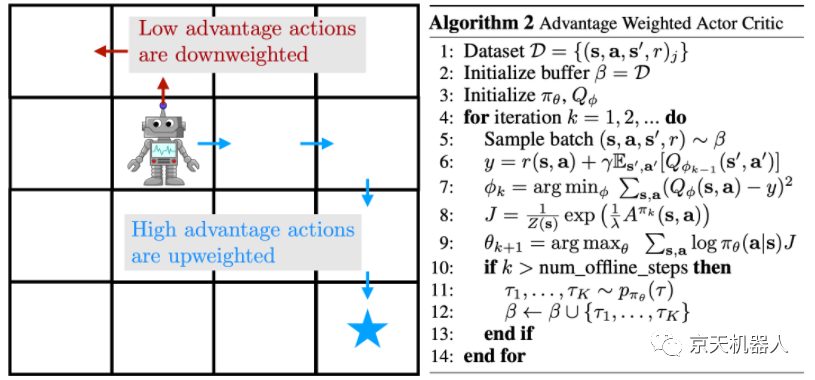

Figure 4: Schematic of AWAC. Left: transitions with high advantage are regressed on with high weight, and transitions with low advantage receive low weight. Right: algorithm pseudocode.
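The actor step in the schematic can be sketched as advantage-weighted maximum likelihood. Following the AWAC paper, the update maximizes E_{s,a∼β}[log π(a|s) · exp(A(s,a)/λ)] over buffer actions, where λ is a temperature. The critic is trained with ordinary off-policy TD backups, which this sketch omits. No behavior model is fitted: the constraint is implicit, because only buffer actions are ever regressed on. The helper names are hypothetical. V(s) is estimated by averaging Q over samples from the current policy, and the sketch uses unnormalized weights.

```python
import numpy as np


def awac_weights(q_data, q_policy_samples, lam=1.0):
    """exp(A / lam), with A(s, a) = Q(s, a) - V(s) and V(s) = mean of Q over policy samples.

    q_data: shape (batch,), Q(s, a) for the buffer actions.
    q_policy_samples: shape (batch, n), Q(s, a') for a' sampled from the current policy.
    """
    v = q_policy_samples.mean(axis=1)
    return np.exp((q_data - v) / lam)


def weighted_gaussian_nll(actions, mean, log_std, weights):
    """Advantage-weighted negative log-likelihood of buffer actions under a diagonal Gaussian policy."""
    std = np.exp(log_std)
    log_prob = -0.5 * (((actions - mean) / std) ** 2 + 2.0 * log_std + np.log(2.0 * np.pi))
    return -np.mean(weights * log_prob.sum(axis=-1))


# Toy batch: three buffer actions from the same state, with hypothetical Q values.
actions = np.array([[-1.0], [0.0], [1.0]])
q_data = np.array([0.0, 0.5, 2.0])
q_policy_samples = np.full((3, 4), 0.5)  # V(s) = 0.5
w = awac_weights(q_data, q_policy_samples, lam=1.0)

# For a state-independent Gaussian with fixed std, the weighted MLE of the mean
# is the advantage-weighted average of the buffer actions.
best_mean = (w[:, None] * actions).sum(axis=0) / w.sum()
log_std = np.zeros(1)
loss_at_best = weighted_gaussian_nll(actions, np.broadcast_to(best_mean, actions.shape), log_std, w)
loss_at_zero = weighted_gaussian_nll(actions, np.zeros_like(actions), log_std, w)
print(w.round(3), best_mean.round(3), loss_at_best < loss_at_zero)
```

The high-advantage action dominates the fit, yet the policy is only ever pulled toward actions that exist in the buffer. A smaller λ sharpens the weighting toward the best buffer actions.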

So how well does this solve the problems raised above? In our experiments, we show that we can learn difficult, high-dimensional, sparse-reward dexterous manipulation problems from human demonstrations and off-policy data. We then evaluate our method on suboptimal prior data generated by a random controller. The paper also includes results on the standard MuJoCo benchmark environments (HalfCheetah, Walker, and Ant).

Dexterous manipulation

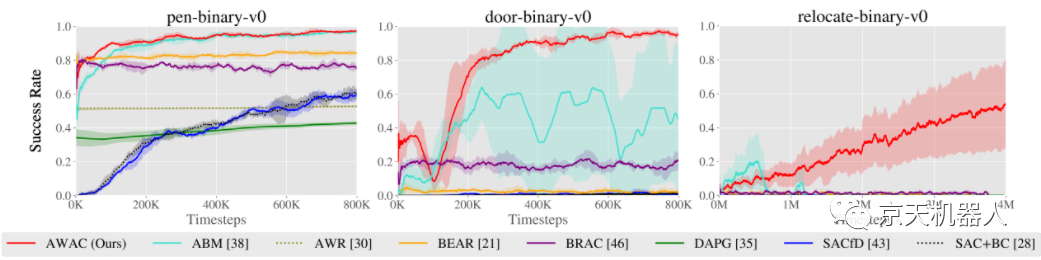

Figure 5. Top: performance of various methods after online training (pen: 200K steps, door: 300K steps, relocate: 5M steps). Bottom: learning curves on dexterous manipulation tasks with sparse rewards. Step 0 corresponds to the start of online training after offline pre-training.

Our goal is to study tasks that represent the difficulties of real-world robot learning, where offline learning and online fine-tuning matter most. One such setting is the suite of dexterous manipulation tasks proposed by Rajeswaran et al. (2017). These tasks require complex manipulation skills with a 28-degree-of-freedom five-fingered hand in the MuJoCo simulator: rotating a pen in the hand, opening a door by unlatching its handle, and picking up a sphere and relocating it to a target position. These environments pose many challenges: high-dimensional action spaces, complex manipulation physics with many intermittent contacts, and randomized hand and object positions. The reward function in each environment is a binary 0-1 reward for task completion. Rajeswaran et al. provide 25 human demonstrations for each task. These demonstrations are not fully optimal, but they do solve the task. Because this dataset is very small, we trained a behavioral cloning policy and sampled from it to generate 500 additional trajectories of interaction data.
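The data augmentation step (clone the demonstrations, then sample from the clone) can be sketched as below. Everything except the counts of 25 demonstrations and 500 generated trajectories is a labeled assumption. The "expert", the linear least-squares policy, the toy dynamics, the 0-1 completion rule, and the horizon of 50 are all hypothetical stand-ins for the MuJoCo hand environments. The residual standard deviation makes the cloned policy stochastic, so the sampled trajectories differ from one another.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, ACTION_DIM = 4, 2
N_DEMOS, N_GENERATED, HORIZON = 25, 500, 50  # HORIZON is an assumed value

# Hypothetical stand-in for the demonstrations: a noisy linear "expert".
expert_w = rng.normal(size=(STATE_DIM, ACTION_DIM))
demo_states = rng.normal(size=(N_DEMOS * HORIZON, STATE_DIM))
demo_actions = demo_states @ expert_w + 0.1 * rng.normal(size=(N_DEMOS * HORIZON, ACTION_DIM))

# Behavioral cloning: least-squares linear policy plus the residual std.
bc_w, *_ = np.linalg.lstsq(demo_states, demo_actions, rcond=None)
bc_std = (demo_actions - demo_states @ bc_w).std(axis=0)


def toy_step(state, action):
    """Hypothetical dynamics with a binary 0-1 reward; a real setup would call the simulator."""
    next_state = 0.9 * state
    next_state[:ACTION_DIM] += 0.1 * action
    reward = float(np.linalg.norm(next_state) < 0.5)  # 1.0 when the toy "task" is complete
    return next_state, reward


def sample_trajectory():
    """Roll out the stochastic cloned policy for one episode."""
    state = rng.normal(size=STATE_DIM)
    traj = []
    for _ in range(HORIZON):
        action = state @ bc_w + bc_std * rng.normal(size=ACTION_DIM)
        next_state, reward = toy_step(state, action)
        traj.append((state, action, reward, next_state))
        state = next_state
    return traj


generated = [sample_trajectory() for _ in range(N_GENERATED)]
print(len(generated), len(generated[0]))  # 500 50
```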

First, on the dexterous manipulation tasks described above, we compared our method with prior methods for off-policy learning, offline learning, and bootstrapping from demonstrations. The results are shown in the figure above. Our method uses the prior data to reach good performance quickly, and its efficient off-policy actor-critic component fine-tunes much faster than DAPG. For example, our method solves the pen task in 120K timesteps, the equivalent of only 20 minutes of online interaction. The baselines and ablations make some progress on the pen task. However, the alternative off-policy RL and offline RL algorithms are largely unable to solve the door and relocate tasks within the time frame considered.
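The "120K timesteps ≈ 20 minutes" equivalence implies a control rate, which the short calculation below makes explicit. The rate itself is not stated in the text. This assumes the steps run in real time at a fixed rate.

```python
steps = 120_000
minutes = 20
steps_per_second = steps / (minutes * 60)
print(steps_per_second)  # 100.0
```

That is, the comparison corresponds to roughly 100 environment steps per second of real time.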

An advantage of off-policy RL is that we can also incorporate suboptimal data, not just demonstrations. In this experiment, we evaluate on a simulated tabletop pushing environment with a Sawyer robot.


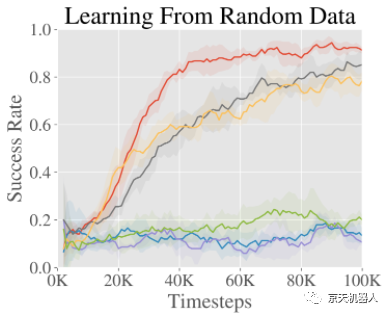

To study the potential of learning from suboptimal data, we used an off-policy dataset of 500 trajectories generated by a random process. The task is to push an object to a target position within a 40 cm x 20 cm goal space.

The results are shown in the figure on the right. Although many methods start from the same initial performance, AWAC learns fastest online and makes effective use of the offline dataset, whereas some methods are unable to learn at all.

Being able to use prior data and fine-tune quickly on new problems opens up many new avenues of research. We are most excited about using AWAC to move from the single-task regime in RL to the multi-task regime, with data sharing and generalization between tasks. The strength of deep learning is its ability to generalize in open-world settings, which has already transformed computer vision and natural language processing. To achieve the same kind of generalization in robotics, we will need RL algorithms that take advantage of large amounts of prior data. One key difference in robotics, however, is that collecting high-quality data for a task is very difficult, often as difficult as solving the task itself. This contrasts with, for example, computer vision, where humans can label the data. Active data collection (online learning) will therefore be an important piece of the puzzle.

This work also suggests several algorithmic directions. Note that here we focus on the mismatch in action distributions between the policy π and the behavior data πβ. In off-policy learning, the marginal state distributions of the two also differ. Intuitively, consider a problem with two solutions, A and B, where B has the higher return and the provided off-policy data demonstrates solution A. Even if the robot discovers solution B during online exploration, the off-policy data still consists mostly of data from path A. The Q-function and policy updates are therefore computed over states encountered along path A, even though the optimal policy will not visit these states. This issue has been studied before. Accounting for both types of distribution mismatch may lead to better RL algorithms.

Finally, we are already using AWAC as a tool to speed up our research. When we set out to solve a task, we usually do not try to solve it from scratch with RL. First, we may teleoperate the robot to confirm that the task is solvable. Then we may run some hard-coded policies or behavioral cloning experiments to see whether simple methods can already solve it. With AWAC, we can save all of the data from these experiments, as well as other experimental data (such as data from hyperparameter sweeps of an RL algorithm), and use it as prior data for RL.
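One simple way to organize this is to keep every transition from every experiment in a single replay buffer, tagged with where it came from, and to append online data to the same buffer during fine-tuning. The sketch below does this. The `Transition` and `ReplayBuffer` classes are hypothetical and are not RLkit's API. Uniform sampling over all stored data is an assumption made for simplicity.

```python
import random
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Transition:
    state: tuple
    action: tuple
    reward: float
    next_state: tuple
    done: bool
    source: str  # e.g. "teleop", "scripted", "bc", "sweep", "online"


@dataclass
class ReplayBuffer:
    """Hypothetical buffer that keeps every transition, whatever experiment produced it."""
    data: list = field(default_factory=list)

    def extend(self, transitions) -> None:
        self.data.extend(transitions)

    def sample(self, batch_size: int, rng: random.Random) -> list:
        # Uniform sampling over all stored data, prior and online alike.
        return rng.sample(self.data, batch_size)


def dummy_transitions(source, n):
    """Placeholder data; real transitions would come from logged experiments."""
    return [Transition((i,), (0.0,), 0.0, (i + 1,), False, source) for i in range(n)]


buffer = ReplayBuffer()
for source, n in [("teleop", 20), ("scripted", 30), ("bc", 50), ("sweep", 100)]:
    buffer.extend(dummy_transitions(source, n))
# Offline pre-training would sample from `buffer` here.
buffer.extend(dummy_transitions("online", 10))  # online fine-tuning appends to the same buffer

batch = buffer.sample(64, random.Random(0))
print(len(buffer.data), Counter(t.source for t in batch))
```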

Reference: Nair A, Dalal M, Gupta A, et al. Accelerating Online Reinforcement Learning with Offline Datasets. arXiv preprint arXiv:2006.09359, 2020.

Open-source code (RLkit): https://github.com/vitchyr/rlkit

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center, Wuhan City, Hubei Province, China

Tel: 027-87522899, 027-87522877
