In the past decade, one of the biggest drivers of the success of machine learning has been the combination of high-capacity models, such as neural networks, with large datasets, such as ImageNet, to produce accurate models. Although deep neural networks have been applied successfully to reinforcement learning (RL) in domains such as robotics, poker, board games, and team-based video games, a major barrier to applying these methods to practical problems is the difficulty of collecting online data at scale. Online data collection is not only time-consuming and expensive, but can also be dangerous in safety-critical domains such as driving or healthcare. For example, it would be unreasonable to let an RL agent explore, make mistakes, and learn while controlling an autonomous vehicle or treating patients in a hospital. This makes learning from previously collected experience attractive. Fortunately, in many fields, including self-driving cars, healthcare, and robotics, large datasets already exist. The ability of RL algorithms to learn offline from such datasets (the offline setting) therefore has enormous potential to shape the way we build machine learning systems in the future.

In off-policy RL, the algorithm learns from experience collected online by an exploration or behavior policy.

In offline RL, we assume that all experience has been collected in advance and is fixed; no additional data can be collected.
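To make the distinction concrete, here is a minimal Python sketch, not taken from D4RL, using a hypothetical `ToyChainEnv` and hypothetical helpers `off_policy_collect` and `offline_sample`. In the off-policy loop, the behavior policy keeps calling the environment and growing the buffer; in the offline loop, training can only sample from a dataset that was collected once and then frozen.

```python
import random


class ToyChainEnv:
    """Hypothetical 5-state chain: action 1 moves right, action 0 moves left,
    and reaching the last state gives reward 1."""

    def __init__(self, n_states=5):
        self.n_states = n_states
        self.calls = 0  # number of environment interactions so far

    def step(self, state, action):
        self.calls += 1
        if action == 1:
            next_state = min(state + 1, self.n_states - 1)
        else:
            next_state = max(state - 1, 0)
        reward = 1.0 if next_state == self.n_states - 1 else 0.0
        return next_state, reward


def off_policy_collect(env, buffer, steps, rng):
    """Off-policy RL: a behavior policy keeps adding fresh experience to the buffer."""
    state = 0
    for _ in range(steps):
        action = rng.choice([0, 1])  # random behavior/exploration policy
        next_state, reward = env.step(state, action)
        buffer.append((state, action, reward, next_state))
        state = 0 if next_state == env.n_states - 1 else next_state  # reset at the goal
    return buffer


def offline_sample(dataset, batch_size, rng):
    """Offline RL: training batches come only from the fixed dataset."""
    return [rng.choice(dataset) for _ in range(batch_size)]


rng = random.Random(0)
env = ToyChainEnv()
dataset = tuple(off_policy_collect(env, [], steps=20, rng=rng))  # collected once, then frozen
calls_before = env.calls
batch = offline_sample(dataset, batch_size=8, rng=rng)
assert env.calls == calls_before  # offline training never touches the environment
```

Freezing the data as a tuple is just one way to express "no additional data can be collected"; an actual offline RL pipeline would typically load a fixed dataset from disk instead.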

The predominant approach to benchmarking offline deep RL has been limited to a single scheme: generate a dataset from some random or previously trained policy, and ask the algorithm to improve on the performance of that policy [e.g., 1, 2, 3, 4, 5, 6]. The problem with this protocol is that real-world datasets are unlikely to be generated by a single RL-trained policy. Unfortunately, many of the situations this evaluation protocol does not cover are known to be problematic for RL algorithms. This makes it difficult to know how well our algorithms would perform in real use, outside of these benchmark tasks.

To develop effective algorithms for offline RL, we need a widely adopted benchmark that is easy to use and accurately measures progress on this problem. Using real-world data, for example from autonomous driving, could be a good indicator of progress, but evaluating algorithms on it is a challenge: most research labs do not have the resources to deploy their algorithms on real vehicles to test whether their methods actually work. To bridge the gap between realistic but infeasible real-world tasks and somewhat contrived but easy-to-use simulated tasks, we recently introduced the D4RL benchmark (Datasets for Deep Data-Driven Reinforcement Learning) for offline RL. The goal of D4RL is simple: we propose tasks designed to exercise the dimensions of the offline RL problem that may make real-world applications difficult, while keeping the entire benchmark in simulated domains so that any researcher around the world can evaluate their methods efficiently. In total, D4RL covers over 40 tasks across 7 qualitatively distinct domains, spanning application areas such as robotic manipulation, navigation, and autonomous driving.

What properties make offline RL difficult?

In a previous blog post, we discussed that simply running conventional off-policy RL algorithms on offline problems is usually not enough and, in the worst case, can cause the algorithm to diverge. In practice, many factors can degrade the performance of RL algorithms, and we used these factors to guide the design of D4RL.
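One mechanism often cited for this failure is that offline data never corrects the value estimates of actions the dataset does not contain, so maximizing over actions can select exactly those unchecked values. The sketch below is our own one-state illustration with made-up numbers (the function `fit_q` and all values are hypothetical), not the analysis from the earlier post; it shows overestimation rather than full divergence.

```python
# Hypothetical one-state problem with three actions. Only action 0 appears in the
# dataset; in this made-up example every action's true reward is 0.5.
dataset = [(0, 0.5)] * 20  # (action, reward) pairs


def fit_q(dataset, init, lr=0.5, epochs=20):
    """Regress each logged action's Q-value toward its observed reward."""
    q = dict(init)  # initial guesses, e.g. the output of an untrained network
    for _ in range(epochs):
        for action, reward in dataset:
            q[action] += lr * (reward - q[action])
    return q


q = fit_q(dataset, init={0: 0.0, 1: 3.0, 2: -1.0})
greedy_action = max(q, key=q.get)
print(greedy_action, q[greedy_action])  # 1 3.0: an action the data never tested
```

Action 0 is fitted to its true value, but the greedy choice lands on action 1, whose optimistic initial estimate no data point ever corrected.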

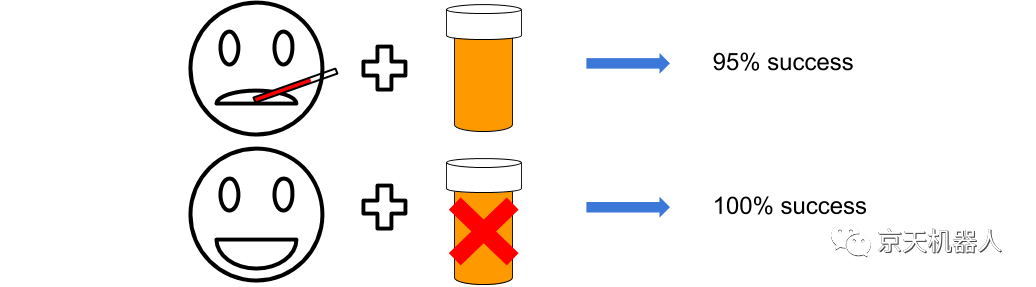

Narrow and biased data distributions are a common property of real-world datasets and can cause problems for offline RL algorithms. By a narrow data distribution, we mean that the dataset lacks significant coverage of the problem's state-action space. A narrow data distribution does not by itself mean that the task is unsolvable; expert demonstrations, for example, often produce narrow distributions, which can make learning difficult. One intuitive reason narrow data distributions are hard to learn from is that they often lack the mistakes an algorithm needs in order to learn. For example, in healthcare, datasets are usually skewed toward severe cases. We may only see severely ill patients receiving treatment (of whom only a fraction survive), and mildly ill patients sent home untreated (almost all of whom survive). A naive algorithm could conclude that treatment causes death, but only because we never observe severely ill patients going untreated; such data would have shown that the survival rate with treatment is much higher.
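The healthcare example can be reproduced with a few hypothetical counts. In this sketch (all numbers are made up), comparing survival by treatment alone makes treatment look harmful, while a simple coverage check reveals that the dataset contains no severely ill, untreated patients, so the comparison that matters cannot be made from this data.

```python
from collections import Counter

# Hypothetical records: (severity, treated, survived). All counts are made up.
records = (
    [("severe", True, True)] * 40 + [("severe", True, False)] * 60
    + [("mild", False, True)] * 98 + [("mild", False, False)] * 2
)


def survival_rate(rows):
    """Fraction of rows whose patient survived."""
    return sum(1 for _, _, survived in rows if survived) / len(rows)


def naive_rates(records):
    """Compare survival by treatment alone, ignoring severity."""
    treated = [r for r in records if r[1]]
    untreated = [r for r in records if not r[1]]
    return survival_rate(treated), survival_rate(untreated)


def coverage(records):
    """Number of records in each (severity, treated) cell of the data."""
    return Counter((severity, treated) for severity, treated, _ in records)


print(naive_rates(records))  # (0.4, 0.98): treatment looks harmful
cells = coverage(records)
print(cells[("severe", False)])  # 0: no severely ill patient was ever left untreated
```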

Data generated by non-representable policies can arise in several realistic situations. For example, human demonstrators may use cues that the RL agent cannot observe, leading to partial observability issues. Some controllers use internal state or memory, which can produce data that no Markovian policy can represent. These situations can cause several problems for offline RL algorithms. First, the inability to represent the policy class has been shown to cause bias in Q-learning algorithms. Second, a key step in many offline RL algorithms (for example, those based on importance weighting) is estimating the probability of the actions in the dataset. Failing to represent the policy that generated the data can introduce an additional source of error.
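A minimal sketch of both points, using a made-up two-action controller of our own (`alternating_controller` and `fit_markov_policy` are hypothetical helpers, not part of D4RL): the controller always sees the same state but alternates actions based on memory, so the best Markovian fit spreads probability evenly, and an importance weight computed from that fit differs from the one implied by the controller's actual behavior.

```python
from collections import Counter, defaultdict


def alternating_controller(steps):
    """Hypothetical controller with memory: it always observes the same state "s"
    but alternates between "left" and "right" based on its previous action."""
    data, last_action = [], "right"
    for _ in range(steps):
        action = "left" if last_action == "right" else "right"
        data.append(("s", action))
        last_action = action
    return data


def fit_markov_policy(data):
    """Fit a Markovian policy pi(a | s) by counting action frequencies per state."""
    counts = defaultdict(Counter)
    for state, action in data:
        counts[state][action] += 1
    return {
        state: {action: n / sum(c.values()) for action, n in c.items()}
        for state, c in counts.items()
    }


data = alternating_controller(10)
pi_hat = fit_markov_policy(data)
print(pi_hat)  # {'s': {'left': 0.5, 'right': 0.5}}

# Importance weight at a logged "left" step, for a target policy that always picks "left".
target_prob = 1.0
true_weight = target_prob / 1.0  # given its memory, the controller picked "left" with prob. 1
estimated_weight = target_prob / pi_hat["s"]["left"]  # Markovian estimate: prob. 0.5
print(true_weight, estimated_weight)  # 1.0 2.0
```

The Markovian fit is the best any state-only policy can do here, yet it doubles the importance weight at this step; errors like this compound over long trajectories.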

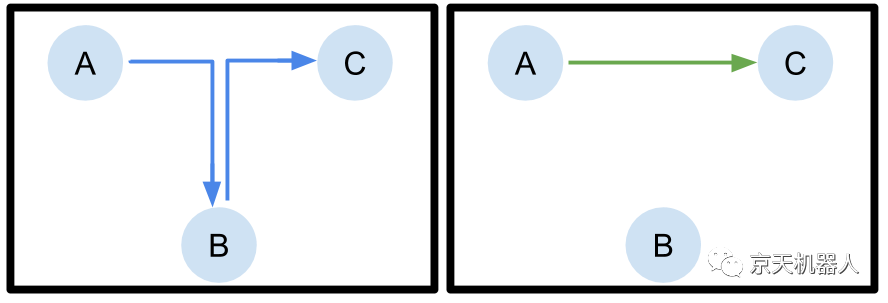

Multitask and undirected data are properties we expect to be common in large, cheaply collected datasets. Imagine you are an RL practitioner who wants to gather a large amount of data to train dialogue agents and personal assistants. The easiest way to do this is to record conversations between real people or collect real conversations from the Internet. In this case, the recorded conversations may have nothing to do with the specific task you want to accomplish, such as booking a flight. However, many parts of these conversation flows may still be useful for tasks other than the one carried out in the observed conversation. One way to visualize this effect is the following example. Imagine an agent trying to get from point A to point C, but it only observes paths from A to B and from B to C. Although no single observed path goes from A to C, the agent can combine the two to reach its goal.
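The A-to-B-to-C example can be made concrete with tabular Q-iteration over a fixed set of transitions. This is a minimal sketch with hypothetical state and action names, chosen by us to illustrate stitching; it is not the algorithm used in D4RL. Because the Bellman backup propagates the goal reward from the B-to-C trajectory back through B into the A-to-B trajectory, the greedy policy from A reaches C even though no logged trajectory does.

```python
# Hypothetical transitions (state, action, reward, next_state, done) from two
# separate trajectories: one goes A -> B, the other goes B -> C (the goal).
dataset = [
    ("A", "east", 0.0, "A1", False),
    ("A1", "east", 0.0, "B", False),
    ("B", "north", 0.0, "B1", False),
    ("B1", "north", 1.0, "C", True),
]


def offline_q_iteration(dataset, gamma=0.9, iters=50):
    """Tabular Q-iteration using only transitions that appear in the dataset."""
    q = {(s, a): 0.0 for s, a, _, _, _ in dataset}
    for _ in range(iters):
        for s, a, r, s_next, done in dataset:
            next_values = [v for (s2, _), v in q.items() if s2 == s_next]
            bootstrap = 0.0 if done or not next_values else max(next_values)
            q[(s, a)] = r + gamma * bootstrap
    return q


def greedy_path(dataset, q, start, max_steps=10):
    """Follow the highest-valued logged action from each state."""
    step = {(s, a): (s_next, done) for s, a, _, s_next, done in dataset}
    path, state = [start], start
    for _ in range(max_steps):
        actions = [(v, a) for (s, a), v in q.items() if s == state]
        if not actions:
            break
        _, best = max(actions)
        state, done = step[(state, best)]
        path.append(state)
        if done:
            break
    return path


q = offline_q_iteration(dataset)
print(greedy_path(dataset, q, "A"))  # ['A', 'A1', 'B', 'B1', 'C']
```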

Suboptimal data is another property we expect to see in real-world datasets, because it may not always be feasible to collect expert demonstrations for every task we wish to solve. In many fields, such as robotics, providing expert demonstrations is tedious and time-consuming. A major advantage of RL over methods such as imitation learning is that RL provides an explicit signal, the reward, for how to improve the policy, which means we can learn from and improve upon a much wider range of data.
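A single-state (bandit) simplification shows why the reward signal matters when data is suboptimal. In this hypothetical sketch, the data-collecting policy mostly chooses a low-reward action; copying the most frequent action, as a simple imitation approach would, reproduces that choice, while ranking actions by their average logged reward recovers the better one. The numbers and helper names are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical logged (action, reward) pairs from a mostly suboptimal behavior policy:
# action "a" is logged often but earns little; action "b" is rare but earns more.
logged = [("a", 0.2)] * 8 + [("b", 1.0)] * 2


def behavior_cloning_choice(logged):
    """Imitation: pick the action the data-collecting policy chose most often."""
    return Counter(action for action, _ in logged).most_common(1)[0][0]


def reward_guided_choice(logged):
    """Use the reward signal: pick the action with the highest average logged reward."""
    totals, counts = defaultdict(float), Counter()
    for action, reward in logged:
        totals[action] += reward
        counts[action] += 1
    return max(counts, key=lambda a: totals[a] / counts[a])


print(behavior_cloning_choice(logged))  # a
print(reward_guided_choice(logged))     # b
```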

D4RL tasks

To capture the properties outlined above, we introduce tasks spanning many qualitatively different domains. Except for Maze2D and AntMaze, all of these domains were originally proposed by other ML researchers; we adapted their work and generated datasets suitable for the offline RL setting.

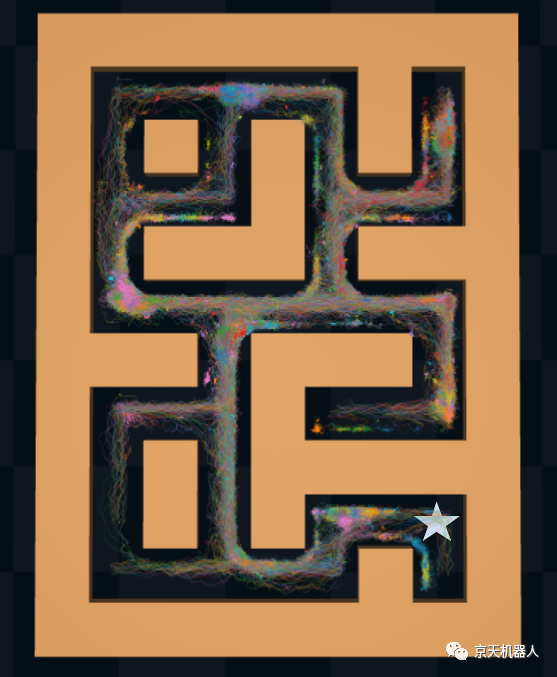

· We start with 3 navigation domains of increasing difficulty. The simplest is the Maze2D domain, in which the agent must navigate a ball along a 2D plane to a target location. There are 3 possible maze layouts (umaze, medium, and large). AntMaze replaces the ball with the "Ant" quadruped robot, adding a more challenging low-level locomotion problem.

The navigation environments let us test the "multitask and undirected" data property extensively, because existing trajectories can be "stitched" together to solve the task. In a plot of the trajectories in the large AntMaze dataset, each trajectory is drawn in a different color and the goal is marked with a star. By learning how to repurpose existing trajectories, the agent can potentially solve the task without having to extrapolate to unseen regions of the state space.

For realistic, vision-based navigation, we use the CARLA simulator. This domain adds a layer of perception on top of the challenges in the Maze2D and AntMaze tasks.

We also include 2 realistic robotic manipulation domains, based on the Adroit platform (built around the Shadow Hand robot) and the Franka platform. The Adroit domain contains 4 separate manipulation tasks, along with human demonstrations recorded via motion capture. This provides a platform for studying how to use human-generated data on a simulated robot.

The Franka kitchen environment places the robot in a realistic kitchen where it can freely interact with objects, for example by opening the microwave and various cabinets, moving the kettle, and turning on the light and burners. The task is to bring the objects in the scene into a desired goal configuration.
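As a rough illustration of what reaching a goal configuration can mean, here is a hypothetical scoring function that counts how many objects are within a tolerance of their goal positions. The object names, joint values, and tolerance are our own assumptions; this is not the reward function shipped with D4RL's Franka kitchen tasks.

```python
# Hypothetical goal: each object maps to a scalar joint position (e.g. how far a
# door is open). Names, values, and the tolerance are illustrative assumptions only.
GOAL = {"microwave": 0.7, "kettle": -0.2, "light_switch": 0.7}


def completed_subtasks(state, goal, tol=0.1):
    """Return the objects whose current position is within `tol` of the goal position."""
    return sorted(
        name for name, target in goal.items() if abs(state[name] - target) <= tol
    )


def sparse_reward(state, goal, tol=0.1):
    """One point per object that has reached its goal configuration."""
    return len(completed_subtasks(state, goal, tol))


state = {"microwave": 0.68, "kettle": 0.0, "light_switch": 0.75}
print(completed_subtasks(state, GOAL))  # ['light_switch', 'microwave']
print(sparse_reward(state, GOAL))       # 2
```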

Future directions

In the near future, we are excited to see offline RL applications move from simulated domains to real-world domains, where large amounts of offline data are readily available. Offline RL could have a significant impact on many practical problems, including:

· Autonomous driving

· Robotics

· Healthcare and treatment planning

· Education

· Recommender systems

· Dialogue agents

· And many more!

In many of these fields, large datasets of previously collected experience already exist and are waiting to be exploited by carefully designed offline RL algorithms. We believe offline reinforcement learning is a promising paradigm for leveraging large amounts of existing sequential data within the flexible decision-making framework of reinforcement learning.

If you are interested in this benchmark, the code is available open-source on GitHub, and more details can be found on our website.

References: Fu J, Kumar A, Nachum O, et al. D4RL: Datasets for Deep Data-Driven Reinforcement Learning. arXiv preprint arXiv:2004.07219, 2020.

Open-source code: https://github.com/rail-berkeley/d4rl

Donghu Robot Laboratory, 2nd Floor, Baogu Innovation and Entrepreneurship Center, Wuhan City, Hubei Province, China

Tel: 027-87522899, 027-87522877
