US Army cadets assist with research on the Joint Understanding and Dialogue Interface (JUDI), a capability of the CCDC Army Research Laboratory that enables two-way dialogue and interaction between soldiers and autonomous systems.

Dialogue is one of the most basic ways humans use language, and it is an ideal capability for autonomous systems. Army researchers have developed a novel dialogue capability that could change how soldiers and robots interact and let them perform joint missions at operational speed.

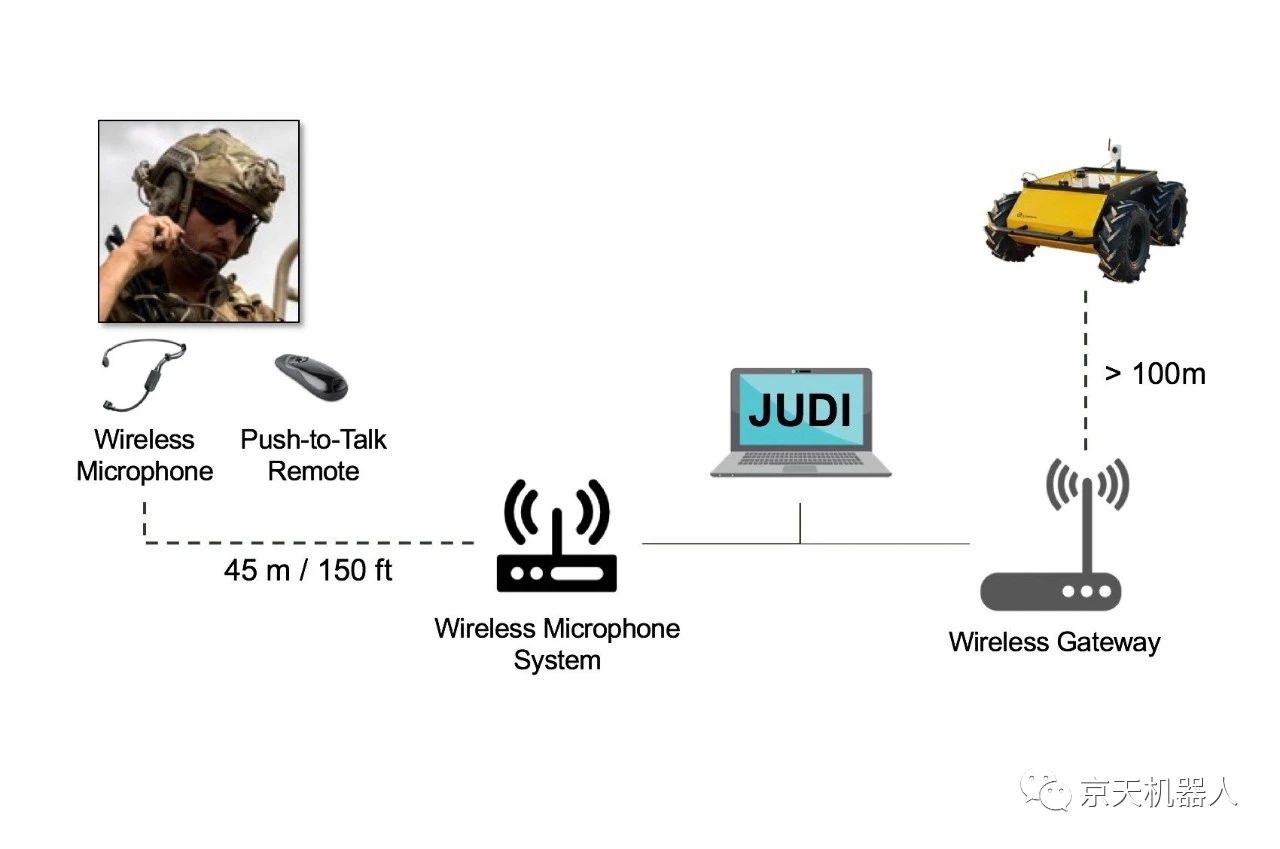

Seamless communication through dialogue will reduce the training needed to control autonomous systems and improve soldier-agent teaming. Researchers from the Army Research Laboratory of the US Army Combat Capabilities Development Command (CCDC) collaborated with the University of Southern California's Institute for Creative Technologies to develop the Joint Understanding and Dialogue Interface (JUDI) capability, which enables two-way dialogue and interaction between soldiers and autonomous systems.

The Institute for Creative Technologies (ICT) is a university-affiliated research center (UARC) sponsored by the Department of Defense (DOD) that works with DOD services and organizations. UARCs are aligned with well-known institutions that conduct research at the forefront of science and innovation. ICT brings together artists from the film and game industries with computer and social scientists to research and develop immersive media for military training, health therapies, education and more.

By reducing the burden on soldiers who work with autonomous systems and enabling verbal command and control of those systems, this work supports the Army modernization priority of Next-Generation Combat Vehicles and the Army priority research area of autonomy.

"Dialogue is essential for autonomous systems that operate across multiple echelons of multi-domain operations, so that soldiers across land, air, sea and information spaces can maintain situational awareness on the battlefield," said Dr. Matthew Marge of the laboratory. "This technology allows soldiers to interact with autonomous systems through two-way speech and dialogue in tactical operations, where verbal task instructions can be used to command and control mobile robots. In turn, it enables robots to ask for clarification or provide status updates as tasks are completed, rather than relying on pre-specified and possibly outdated mission information."

In this approach, dialogue processing is based on a statistical classification method that interprets a soldier's intent from their spoken language. The classifier was trained on a small dataset of human-robot dialogue collected during the initial phases of the research, when human experimenters stood in for the robot's autonomy.
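The article describes JUDI's dialogue processing only at a high level: a statistical classifier infers a soldier's intent from spoken language and is trained on a small human-robot dialogue dataset. As a rough illustration of that general idea, and not JUDI's actual method (which the article does not specify), the sketch below uses a multinomial naive Bayes classifier with add-one smoothing over a bag of words. The intent labels, the training utterances, and the class name `NaiveBayesIntentClassifier` are all hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace; a deliberately simple tokenizer."""
    return text.lower().split()


class NaiveBayesIntentClassifier:
    """Hypothetical multinomial naive Bayes intent classifier with add-one smoothing."""

    def __init__(self):
        self.intent_counts = Counter()           # number of training utterances per intent
        self.word_counts = defaultdict(Counter)  # word frequencies within each intent
        self.vocab = set()                       # every word seen during training

    def fit(self, examples):
        """Train on an iterable of (utterance, intent) pairs."""
        for utterance, intent in examples:
            self.intent_counts[intent] += 1
            for word in tokenize(utterance):
                self.word_counts[intent][word] += 1
                self.vocab.add(word)
        return self

    def predict(self, utterance):
        """Return the intent with the highest log-posterior score."""
        total = sum(self.intent_counts.values())
        vocab_size = len(self.vocab)
        best_intent, best_score = None, float("-inf")
        for intent, count in self.intent_counts.items():
            score = math.log(count / total)  # log prior P(intent)
            words_in_intent = sum(self.word_counts[intent].values())
            for word in tokenize(utterance):
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                # Add-one smoothing keeps unseen (word, intent) pairs from zeroing the score.
                likelihood = (self.word_counts[intent][word] + 1) / (words_in_intent + vocab_size)
                score += math.log(likelihood)
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


# Hypothetical, tiny training set standing in for a small human-robot dialogue corpus.
TRAINING_DATA = [
    ("move forward five meters", "move"),
    ("drive ahead ten feet", "move"),
    ("go to the doorway", "move"),
    ("turn left ninety degrees", "turn"),
    ("rotate right", "turn"),
    ("turn around", "turn"),
    ("take a photo", "photo"),
    ("send me a picture of the room", "photo"),
    ("what do you see", "status"),
    ("are you done yet", "status"),
]

clf = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
print(clf.predict("turn right"))      # → turn
print(clf.predict("send a picture"))  # → photo
```

Naive Bayes is used here only because it trains instantly on a handful of examples, which fits the small-data setting the article describes; a fielded system would classify speech-recognizer output and handle much richer commands.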

The software, developed in collaboration with USC ICT, uses technology from the institute's Virtual Human Toolkit.

Marge said: "JUDI's ability to use natural language will reduce the learning curve for soldiers who need to control or team with robots, some of which may contribute different capabilities to a mission, such as reconnaissance or transporting supplies."

The goal is to shift soldier-robot interaction from today's heads-down, hands-full joystick operation to heads-up, hands-free interaction, so that soldiers can team with one or more robots while maintaining situational awareness of their surroundings.

According to the researchers, JUDI differs from similar research currently under way in the commercial sector.

Marge said: "Industry has mainly focused on intelligent personal assistants such as Siri and Alexa. These systems can retrieve factual knowledge and perform specialized tasks such as setting reminders, but they do not reason about the immediate physical environment. They also rely on cloud connectivity and large labeled datasets to learn how to perform tasks."

In contrast, Marge said, JUDI is designed for tasks that require reasoning in the physical world, where data is sparse because it must come from prior human-robot interaction, and reliable cloud connectivity is rarely available. Current intelligent personal assistants may rely on thousands of training examples, while JUDI can be tailored to a task with only hundreds, an order of magnitude fewer.

In addition, JUDI is a dialogue system built for autonomous systems such as robots, which lets it draw on multiple sources of context, such as the soldier's speech and the robot's perception system, to support collaborative decision-making.

This research represents a synergy among ARL researchers at the laboratory's Maryland location and at ARL West in Playa Vista, California, working under the lab's Human Autonomy Teaming (HAT) and Artificial Intelligence for Maneuver and Mobility (AIMM) Essential Research Programs, together with dialogue experts from USC ICT. The team's speech recognizer also uses speech models developed under the Babel program of the Intelligence Advanced Research Projects Activity (IARPA), which were designed for reverberant and noisy acoustic environments.

JUDI will be integrated into the CCDC ARL Autonomy Stack, a suite of software algorithms, libraries and components that perform specific functions required by intelligent systems, such as navigation, planning, perception, control and reasoning. The stack was developed over the past decade as part of a long-term robotics collaborative technology alliance.

Successful innovations in the stack have also been transitioned to the robotics core of the CCDC Ground Vehicle Systems Center (GVSC).

"Once ARL develops a new capability that is built into the autonomy software stack, it is spiraled into GVSC's robotics core, where it undergoes extensive testing and hardening and is used in programs such as Combat Vehicle Robotics, or CoVeR," said Dr. John Fossaceca, AIMM ERP program manager. "Ultimately, this becomes Army-owned intellectual property that is shared with industry partners as a common architecture, to ensure that next-generation combat vehicles are built on best-in-class technology with modular interfaces."

Looking ahead, researchers will evaluate JUDI's robustness on physical mobile robot platforms in a field test under the AIMM ERP scheduled for September.

Marge said: "Our ultimate goal is to make it easier for soldiers to team with autonomous systems so that they can accomplish missions more effectively and safely, especially in reconnaissance and search-and-rescue scenarios. I will be very pleased to know that soldiers have more accessible interfaces to autonomous systems, interfaces that can scale and easily adapt to the mission context."

The two robots used in the experiment are identically equipped except for their LiDAR sensors: a Velodyne VLP-16 (left) and an Ouster OS1 (right).

