The research team at Carnegie Mellon University recently published a study presenting a real-time Human-to-Humanoid (H2O) whole-body teleoperation system. Notably, the robot used in the experiments is the H1 humanoid from Unitree, which has recently become faster (reaching 3.3 m/s) and can perform a standing backflip.

H2O is a reinforcement learning (RL) based framework that enables real-time whole-body teleoperation of a full-sized humanoid robot using only an RGB camera. To build a large-scale dataset of human motions retargeted to the humanoid, the team proposes a scalable "sim-to-data" process that uses a privileged motion imitator to filter the motions and keep only the feasible ones. These cleaned motions are then used to train a robust real-time humanoid motion imitator in simulation, which is transferred to the real robot in a zero-shot manner. The team reports successful teleoperation of dynamic whole-body motions in real-world scenarios, including walking, back jumping, kicking, turning, waving, pushing, and boxing. To the team's knowledge, this is the first demonstration of learning-based, real-time, whole-body humanoid teleoperation.

Whole-body control of humanoid robots has long been a challenge in robotics, and the difficulty increases when the robot must mimic free-form human motion in real time.

Recent progress in applying reinforcement learning to humanoid control offers a feasible alternative. In the graphics community, RL has already been used to generate complex human motions, perform multiple tasks, and follow real-time human motion recorded by a camera, all in simulation.

The team's Human-to-Humanoid (H2O) system is a scalable, learning-based system that uses only a single RGB camera to teleoperate a humanoid robot's whole body in real time. The researchers say that, through the new sim-to-data process and reinforcement learning, their approach addresses the complex problem of converting human motions into motions that a humanoid robot can actually perform.

Using a whole-body motion imitator similar to the Perpetual Humanoid Controller (PHC), the team proposes a method for training a policy in simulation and transferring it zero-shot to real-world deployment.

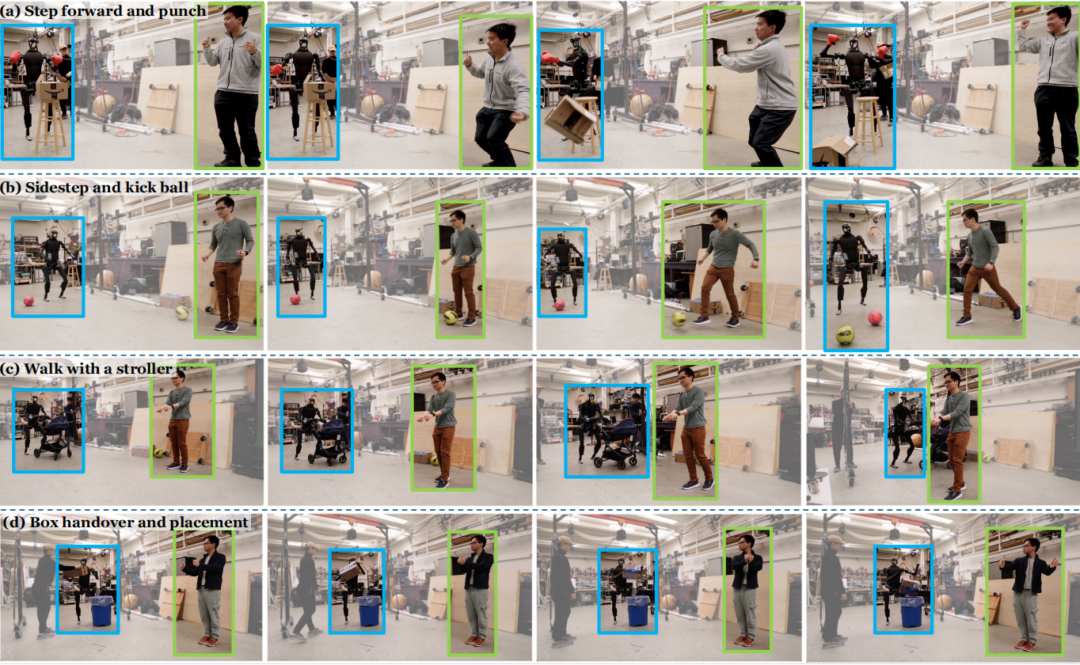

As shown in the video shared by the team, the setup uses a Unitree H1 humanoid robot equipped with a D435 depth camera and a 360-degree LiDAR. A human operator controls the robot in real time through a simple webcam interface. The operator can readily direct the robot through diverse tasks, including pick-and-place, kicking and striking motions, and pushing a stroller.

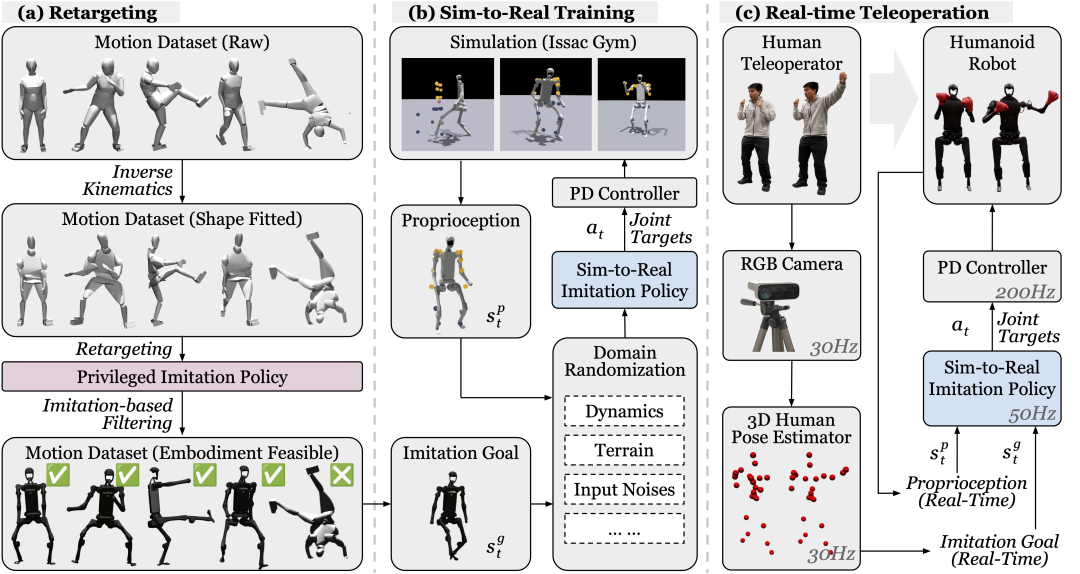

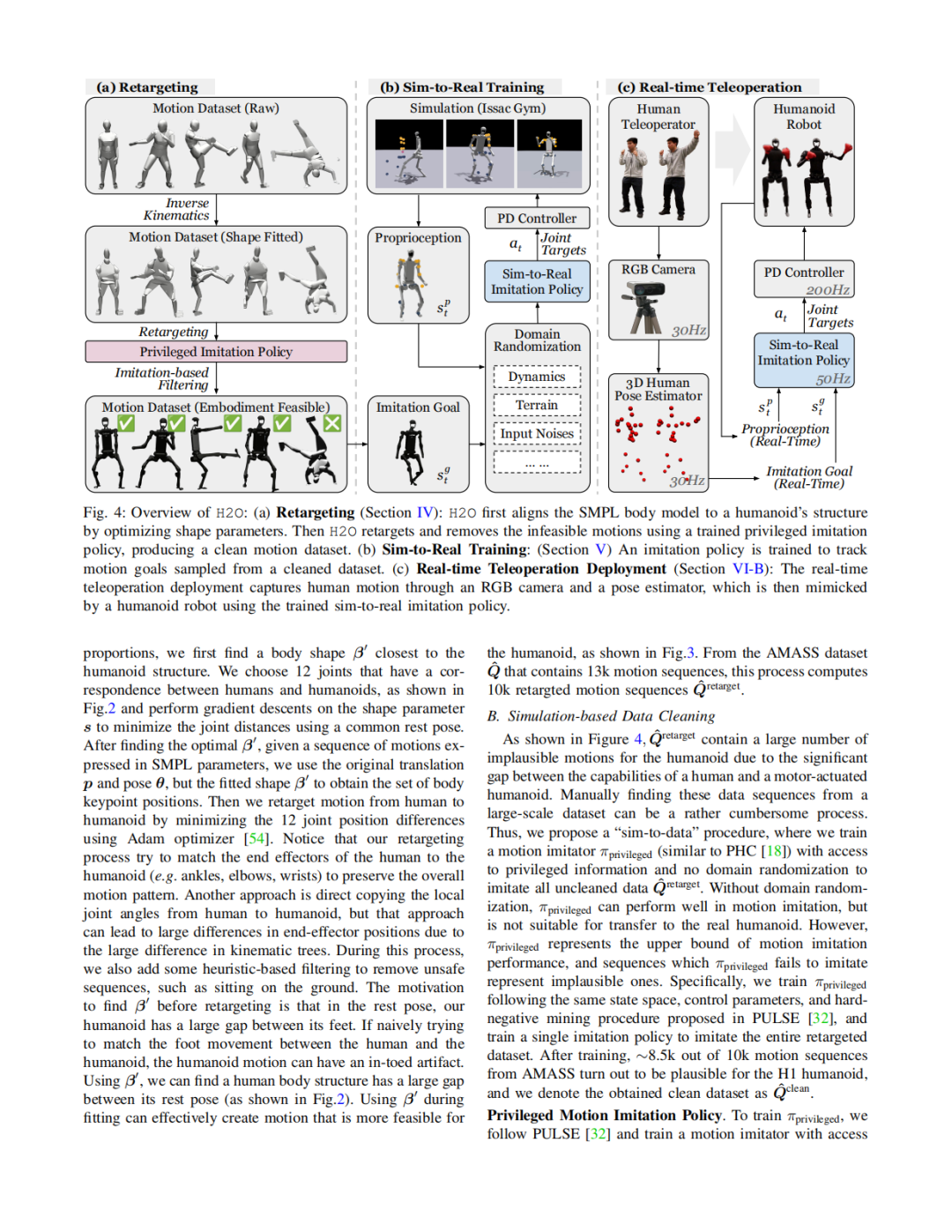

Retargeting: In the first stage, the process aligns the SMPL body model to a humanoid's structure by optimizing shape and motion parameters. The second stage refines this by removing artifacts and infeasible motions using a trained privileged imitation policy, yielding a realistic and cleaned motion dataset for the humanoid.
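The second retargeting stage can be pictured as a filter over motion clips. The following is a minimal sketch under stated assumptions, not the team's implementation: `MotionClip`, `max_tracking_error`, `filter_feasible`, the `rollout` callable (standing in for a privileged imitation policy run in simulation), and the `threshold` value are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = Sequence[float]  # flattened keypoint values for one frame (assumed layout)


@dataclass
class MotionClip:
    name: str
    frames: List[Frame]


def max_tracking_error(reference: List[Frame], achieved: List[Frame]) -> float:
    """Largest per-frame mean absolute deviation between two clips."""
    worst = 0.0
    for ref, got in zip(reference, achieved):
        err = sum(abs(r - g) for r, g in zip(ref, got)) / len(ref)
        worst = max(worst, err)
    return worst


def filter_feasible(
    clips: List[MotionClip],
    rollout: Callable[[MotionClip], List[Frame]],  # hypothetical simulated imitator
    threshold: float = 0.5,                        # illustrative value only
) -> List[MotionClip]:
    """Keep clips that the simulated imitator can follow closely to the end."""
    kept = []
    for clip in clips:
        achieved = rollout(clip)
        finished = len(achieved) == len(clip.frames)  # a shorter rollout means an early stop
        if finished and max_tracking_error(clip.frames, achieved) <= threshold:
            kept.append(clip)
    return kept
```

A clip is dropped either when the rollout stops early or when the tracked motion drifts too far from the reference, which is one simple way to express "infeasible" in code.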

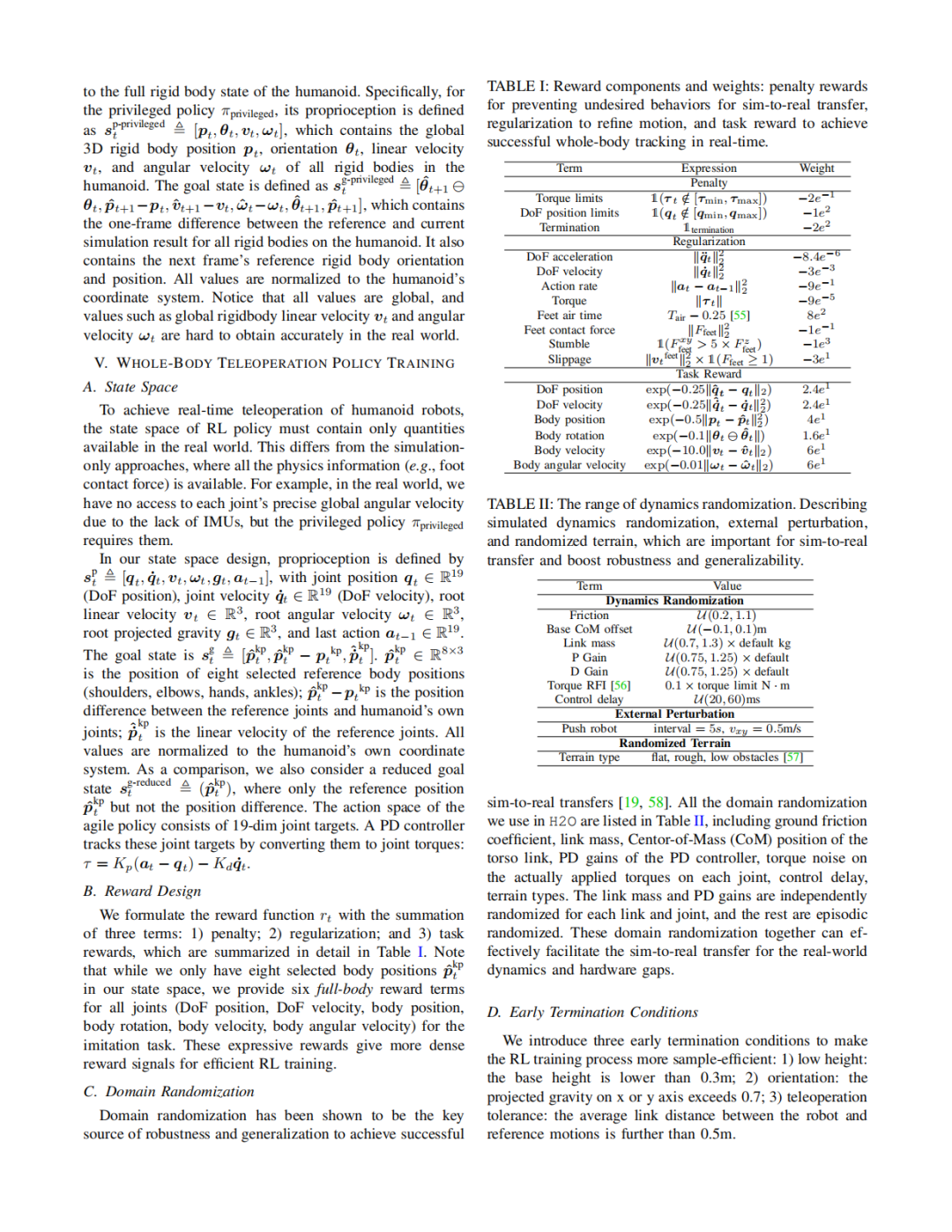

Sim-to-Real Training: An imitation policy is trained to track motion goals sampled from the cleaned retargeted dataset.
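To make "tracking motion goals" concrete, here is a small sketch of an exponential tracking reward, a form commonly used in motion imitation with RL. It is an assumption for illustration and not necessarily the reward used by the H2O team; the function name `tracking_reward` and the `sigma` value are hypothetical.

```python
import math
from typing import Sequence


def tracking_reward(target: Sequence[float], actual: Sequence[float],
                    sigma: float = 0.25) -> float:
    """Reward in (0, 1]: 1.0 for a perfect match, decaying as the error grows."""
    mean_sq_error = sum((t - a) ** 2 for t, a in zip(target, actual)) / len(target)
    return math.exp(-mean_sq_error / sigma)
```

A smaller `sigma` makes the reward stricter, so the policy is pushed to follow the goal more tightly.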

Real-time Teleoperation Deployment: Human motion is captured by an RGB camera and a pose estimator, and the humanoid robot mimics it using the trained sim-to-real imitation policy.
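The deployment stage amounts to a loop from camera frame to robot command. The sketch below shows only that data flow; every callable (`estimate_pose`, `read_robot_state`, `policy`, `send_joint_targets`) is a hypothetical placeholder, not an API from the H2O project or from Unitree.

```python
from typing import Callable, Iterable, List, Optional

Pose = List[float]


def teleoperate(
    frames: Iterable[object],                                # RGB frames from the camera
    estimate_pose: Callable[[object], Optional[Pose]],       # hypothetical pose estimator
    read_robot_state: Callable[[], List[float]],             # hypothetical proprioception read
    policy: Callable[[List[float], Pose], List[float]],      # trained imitation policy
    send_joint_targets: Callable[[List[float]], None],       # hypothetical robot interface
) -> int:
    """Run the camera -> pose -> policy -> robot loop; return the number of commands sent."""
    sent = 0
    for frame in frames:
        goal = estimate_pose(frame)
        if goal is None:  # no person detected in this frame, so skip it
            continue
        state = read_robot_state()
        send_joint_targets(policy(state, goal))
        sent += 1
    return sent
```

Passing the components in as callables keeps the loop testable with stand-ins before any real camera or robot is connected.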

Project page: https://human2humanoid.com
