Advances in robotics and artificial intelligence (AI) have opened the door to sophisticated assistive robots that can adapt to dynamic environments. These robots can provide personalized assistance and also learn from their interactions. Assistive robots can now be designed to support individuals with disabilities or other limitations in performing daily activities, which could enhance their independence, mobility and overall quality of life.

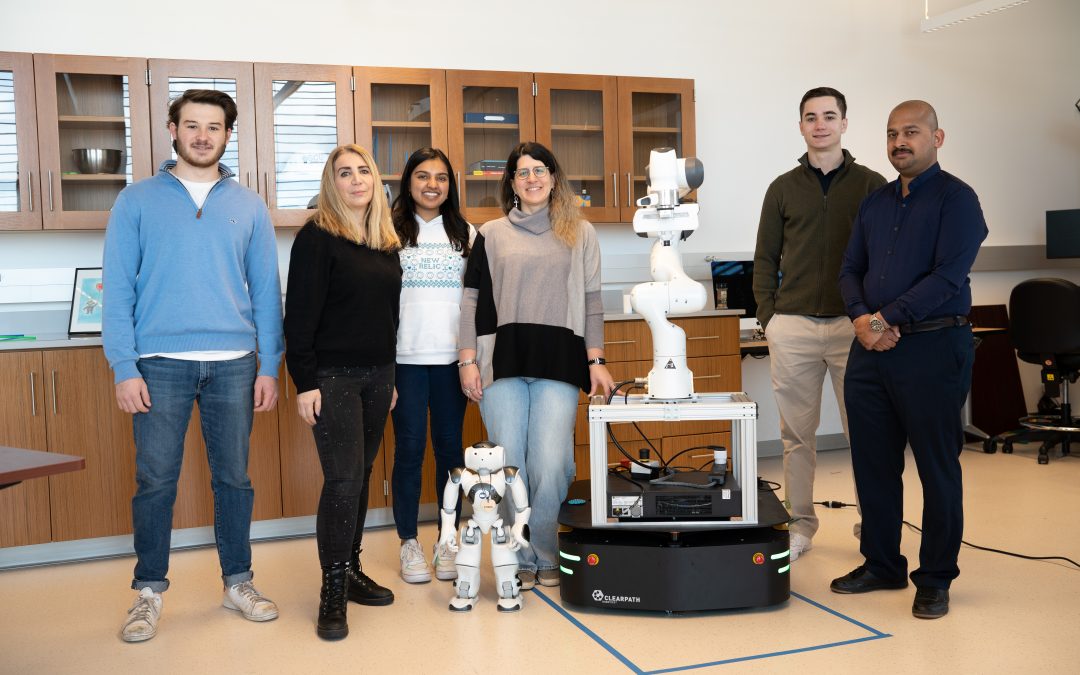

Developing assistive robots is a challenging research area, especially when these systems must be integrated into human environments such as homes and hospitals. To tackle these challenges, the Human-Machine Interaction & Innovation (HMI2) Lab at Santa Clara University is creating a versatile intelligent robot.

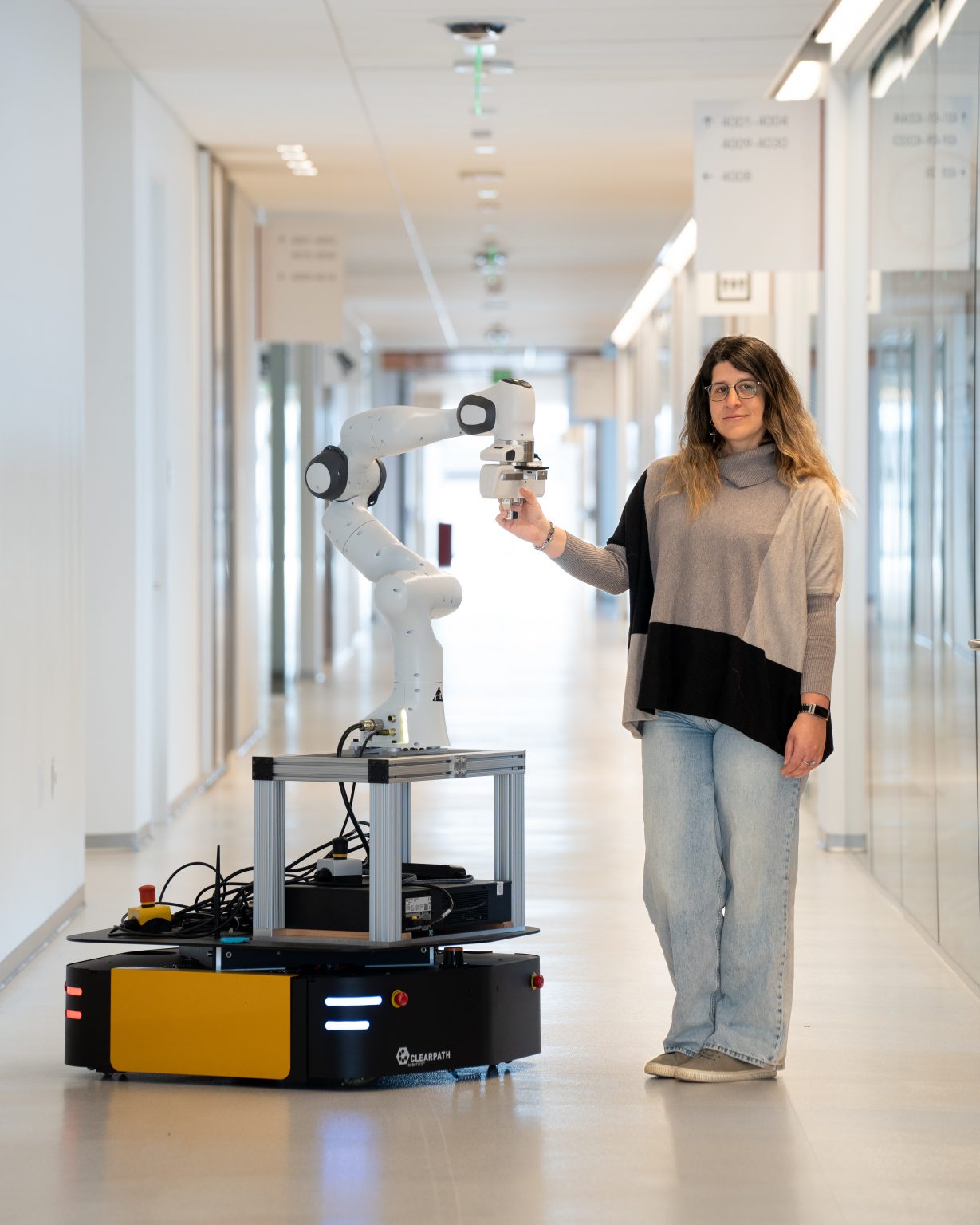

The assistive robot, named ‘Alex’, is built on Ridgeback, Clearpath Robotics’s versatile indoor omnidirectional platform. The Ridgeback uses its omni-drive to move manipulators and heavy payloads with ease. In this case, the Ridgeback mobile robot has a Franka arm mounted on top, which is used to guide people through crowded environments such as hospitals. The system also includes two cameras. An Intel D405 camera is mounted on the end-effector of the robotic arm and is used to recognize objects in the environment and to refine grasping points. The second camera, an Intel D455, is mounted on the mobile base to recognize objects in the environment and improve navigation.

So far, the team has tested the robot in two very different but equally dynamic environments to showcase its versatility. They focused on two main tasks:

(1) Walking assistance in crowded areas such as hospitals.

(2) Collaborative cooking at home.

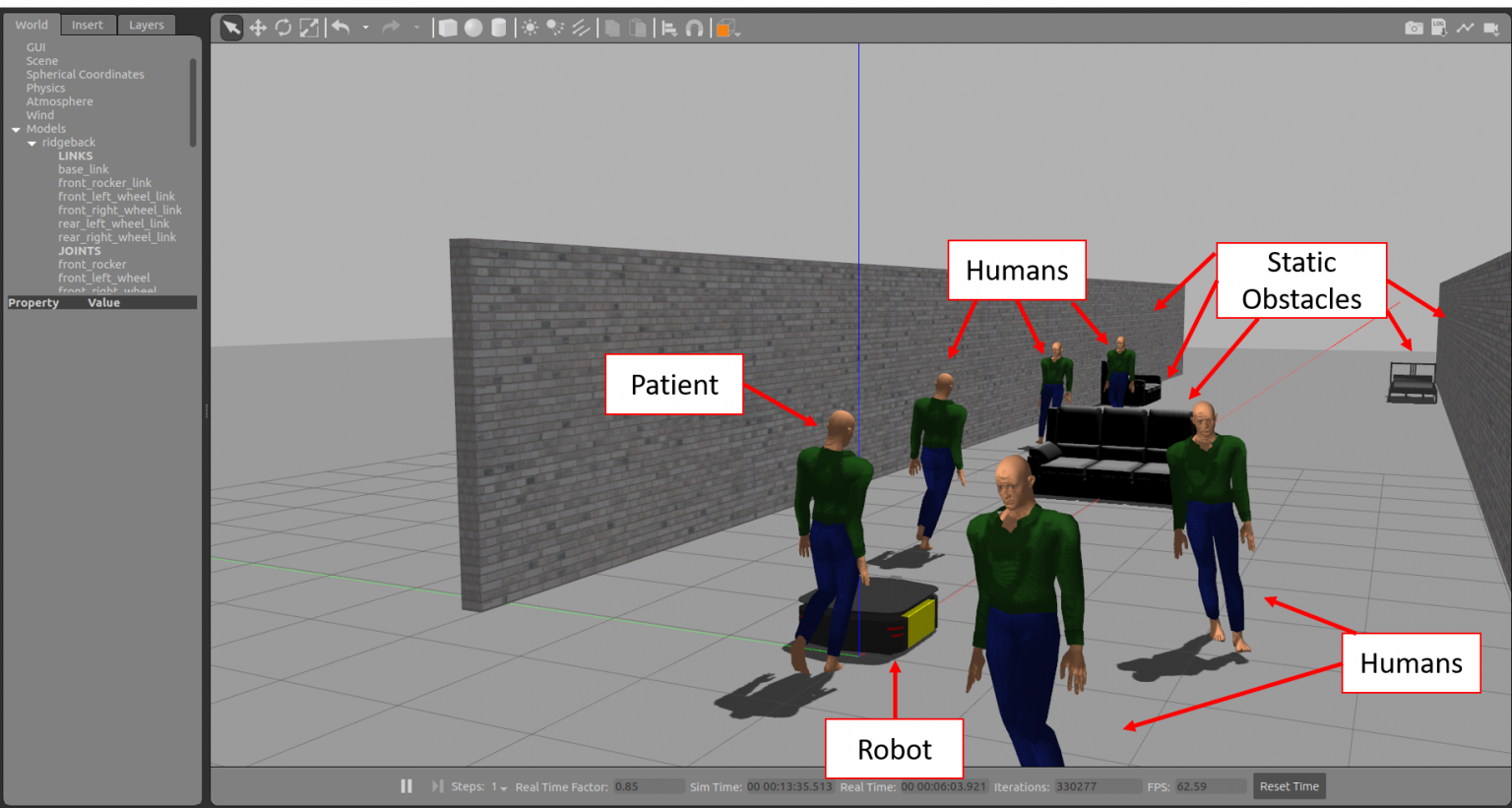

A dynamic hospital environment simulated in Gazebo

For the first task, Alex guides patients through a crowded hospital corridor while avoiding collisions. To accomplish this, the team developed a framework based on deep reinforcement learning. The framework enables co-navigation between patient and robot while avoiding both static and dynamic obstacles. It is implemented and evaluated in Gazebo, and both the framework and the simulated environment are built on the open-source Robot Operating System (ROS).
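To make the idea of reinforcement learning for co-navigation more concrete, here is a minimal sketch of the kind of shaped reward such an agent could be trained on. It is not the reward used in the team's framework: the `NavState` fields, the thresholds and the weights are all assumptions chosen for illustration. The sketch rewards progress toward the goal, penalizes collisions, and penalizes leaving the patient too far behind.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass
class NavState:
    """Hypothetical snapshot of one simulation step (all distances in metres)."""
    robot_xy: Point
    patient_xy: Point
    goal_xy: Point
    min_obstacle_dist: float  # distance to the closest static or dynamic obstacle


def distance(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def co_navigation_reward(prev: NavState, curr: NavState,
                         collision_radius: float = 0.5,
                         max_patient_gap: float = 1.5,
                         goal_radius: float = 0.3) -> float:
    """Illustrative shaped reward; thresholds and weights are assumptions."""
    # Terminal penalty: the robot came too close to an obstacle.
    if curr.min_obstacle_dist < collision_radius:
        return -10.0
    # Terminal bonus: the robot reached the goal.
    if distance(curr.robot_xy, curr.goal_xy) < goal_radius:
        return 10.0
    # Dense term: how much closer to the goal the robot got during this step.
    progress = (distance(prev.robot_xy, prev.goal_xy)
                - distance(curr.robot_xy, curr.goal_xy))
    # Co-navigation term: penalize any gap to the patient beyond the allowed one.
    gap = distance(curr.robot_xy, curr.patient_xy)
    gap_penalty = max(0.0, gap - max_patient_gap)
    return progress - 0.5 * gap_penalty
```

In a simulator such as Gazebo, a function like this would be called once per step with the previous and current state, and the returned value would be passed to the learning algorithm as the step reward.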

In the second task, Alex demonstrates the ability to understand spoken instructions from humans and fetch the items needed for collaborative cooking. Spoken language is a natural way for humans to communicate with their companions. With recent breakthroughs in Natural Language Processing (NLP) such as ChatGPT, humans and robots can now communicate in natural, unstructured conversations. Leveraging these tools, the team has introduced a framework called Speech2Action, which takes spoken language as input and automatically generates robot actions for a cooking task based on the spoken instructions.
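As a rough illustration of turning a spoken instruction into robot actions, the sketch below handles only the simplest structured case: it assumes the speech has already been transcribed to text and uses keyword matching to produce a sequence of action steps. This is not the Speech2Action implementation; the item list, the verb list and the action names (`navigate_to`, `grasp`, `deliver`) are hypothetical.

```python
from typing import List, Tuple

# Hypothetical vocabulary for a cooking task; not taken from the team's framework.
KNOWN_ITEMS = {"salt", "pepper", "spatula", "bowl"}
FETCH_VERBS = {"bring", "fetch", "get", "pass"}

Action = Tuple[str, str]  # (action name, target item)


def text_to_actions(utterance: str) -> List[Action]:
    """Map an already-transcribed command to a list of illustrative robot actions."""
    # Normalize: lower-case and strip simple punctuation before splitting into words.
    cleaned = utterance.lower()
    for ch in ",.!?":
        cleaned = cleaned.replace(ch, " ")
    words = cleaned.split()

    # Without a recognized fetch verb, the command is not treated as a request.
    if not any(word in FETCH_VERBS for word in words):
        return []

    # Each known item mentioned becomes a navigate / grasp / deliver sequence.
    actions: List[Action] = []
    for word in words:
        if word in KNOWN_ITEMS:
            actions.extend([("navigate_to", word), ("grasp", word), ("deliver", word)])
    return actions
```

For example, `text_to_actions("Alex, please bring the salt and the bowl.")` yields a navigate, grasp and deliver step for the salt followed by the same three steps for the bowl. Keyword matching of this kind breaks down on unstructured, conversational requests, which is where language models become useful.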

Here’s Alex fetching some cooking items during a collaborative cooking task:

The team conducted a study with 30 participants to evaluate their framework. The participants gave both structured and unstructured commands during a collaborative cooking task. The study showed how a robot's errors influence people's perception of its usefulness and shape their overall view of the interaction. Contrary to expectations, the majority of participants favored the unscripted mode, preferring that robots engage in spontaneous conversation and meaningful dialogue. This fosters a more human-like and adaptable exchange between humans and robots.

The successful implementation of these tasks not only demonstrates the robot’s versatility but also opens up new possibilities in human-robot interaction and assistive robotics.

The team chose Ridgeback for its reliability and Clearpath’s extensive ROS support.

“The robotic systems from Clearpath are very reliable. We have conducted more than 40 hours of user studies and the Ridgeback is outstanding. ROS support is also very helpful as we can deploy our frameworks very quickly and reliably.” – Dr. Maria Kyrarini, Assistant Professor, Department of Electrical and Computer Engineering, Santa Clara University

The team plans to extend their frameworks to other tasks, such as robotic assistance with getting ready for work. They are also interested in developing solutions for people who are blind or visually impaired. Because developing inclusive robots is important to the team, they plan to evaluate their systems with end-users.

The team members involved in this project are Maria Kyrarini (Assistant Professor), Krishna Kodur (PhD Student), Manizheh Zand (PhD Student), Aly Khater (MS Student), Sofia Nedorosleva (MS Student), Matthew Tognotti (Undergraduate Student), Aidan O’Hare (Undergraduate Student), and Julia Lang (Undergraduate Student).

If you would like to learn more about the Human-Machine Interaction & Innovation (HMI2) Lab, you can visit their LinkedIn page here.

If you would like to learn more about Ridgeback, you can visit our website here.

[1] Kodur K. and Kyrarini M., 2023. Patient–Robot Co-Navigation of Crowded Hospital Environments. Applied Sciences, 13(7), 4576.

https://www.mdpi.com/2076-3417/13/7/4576

[2] Kodur K., Zand M., and Kyrarini M., 2023. Towards Robot Learning from Spoken Language. In Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’23 Companion), pp. 112-116. ACM.

https://dl.acm.org/doi/10.1145/3568294.3580053

[3] Zand M., Kodur K., and Kyrarini M., 2023. Automatic Generation of Robot Actions for Collaborative Tasks from Speech. 2023 9th International Conference on Automation, Robotics and Applications (ICARA), pp. 155-159. IEEE.

https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=10125800

[4] Kodur K., Zand M., Tognotti M., Jauregui C., and Kyrarini M., 2023. Structured and Unstructured Speech2Action Frameworks for Human-Robot Collaboration: A User Study. TechRxiv, August 28, 2023. [under review]

https://www.techrxiv.org/doi/full/10.36227/techrxiv.24022452.v1
